Diabetes Analysis and Detection with Statistics and Machine Learning

Objective Statement

The primary objective of this project is to develop a predictive model that classifies individuals by diabetes status, using a variety of health-related and sociodemographic factors. The aim is not only to label individuals as diabetic or non-diabetic but also to quantify their risk of diabetes through probabilistic predictions.

This is a supervised learning task: the model is trained on a dataset with known labels (presence or absence of diabetes). Because the response variable is binary, indicating the presence or absence of diabetes, the task is a binary classification problem.

To achieve these objectives, we will use machine learning techniques. They let us model the interplay among many variables and identify the patterns that contribute most to the likelihood of diabetes, which leads to more accurate predictions and risk assessments.
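To make "probabilistic predictions" concrete, here is a minimal sketch that assumes a logistic model: a linear score of the features is mapped to a probability with the sigmoid function, and a class label is obtained by thresholding that probability. The functions, weights and feature values are hypothetical and only illustrate the idea; the project's actual models are estimated from the data rather than set by hand.

```python
import numpy as np

def predict_proba(X, weights, bias):
    """Probability of the positive class (diabetes) under a logistic model."""
    scores = X @ weights + bias            # linear risk score per individual
    return 1.0 / (1.0 + np.exp(-scores))   # sigmoid maps scores into (0, 1)

def predict(X, weights, bias, threshold=0.5):
    """Hard labels: 1 (diabetes) if the probability reaches the threshold, else 0."""
    return (predict_proba(X, weights, bias) >= threshold).astype(int)

# Hypothetical coefficients for two standardized features (e.g. BMI and age).
weights = np.array([0.8, 0.5])
bias = -1.0
X = np.array([[0.0, 0.0],    # an "average" individual
              [2.0, 1.5]])   # higher BMI and older

print(predict_proba(X, weights, bias))
print(predict(X, weights, bias))
```

Lowering `threshold` labels more individuals as at risk, which raises recall at the expense of precision; with only about 14% of positive cases in this data, that choice has a noticeable effect.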

Requirements

```python
import sys
import pickle

import numpy as np
import pandas as pd
import polars as pl
import matplotlib.pyplot as plt
import seaborn as sns
import statsmodels.api as sm
import joblib
from PIL import Image

from sklearn.model_selection import (train_test_split, StratifiedKFold, cross_val_score,
                                     GridSearchCV, RandomizedSearchCV)
from sklearn.preprocessing import OrdinalEncoder, StandardScaler
from sklearn.compose import ColumnTransformer
from sklearn.pipeline import Pipeline
from sklearn.impute import SimpleImputer
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier
from sklearn.ensemble import (RandomForestClassifier, HistGradientBoostingClassifier,
                              BaggingClassifier, GradientBoostingClassifier,
                              ExtraTreesClassifier, StackingClassifier)
from sklearn.svm import LinearSVC
from sklearn.neural_network import MLPClassifier
from sklearn.metrics import (accuracy_score, balanced_accuracy_score, confusion_matrix,
                             ConfusionMatrixDisplay, f1_score, recall_score, precision_score)
from xgboost import XGBClassifier

from imblearn.pipeline import Pipeline as ImblearnPipeline
from imblearn.under_sampling import RandomUnderSampler, NearMiss
from imblearn.over_sampling import RandomOverSampler, SMOTE
from imblearn.combine import SMOTETomek

sns.set_theme(style="whitegrid")

# The author's own helper modules, loaded from local folders (adjust the paths to your copies).
sys.path.insert(0, 'C:/Users/fscielzo/Documents/DataScience-GitHub/EDA')
from EDA import (dtypes_df, change_type, prop_cols_nulls, corr_matrix, outliers_table,
                 histogram_matrix, barplot_matrix, scatter_matrix, quant_to_cat, summary,
                 histogram, freq_table, boxplot, ecdfplot, boxplot_matrix, boxplot_2D_matrix,
                 stripplot_matrix, histogram_2D_matrix, ecdf_2D_matrix,
                 cross_quant_cat_summary, contingency_table_2D, ecdf_matrix)

sys.path.insert(0, r'C:\Users\fscielzo\Documents\DataScience-GitHub\Regression\ML')
from PyML import (encoder, scaler, features_selector, imputer, SimpleEvaluation,
                  predictive_plots, predictive_intervals, OptunaSearchCV)
```

Why Polars instead of Pandas? See the GitHub repository FabioScielzoOrtiz/Spark_Master_Plot.

```python
# One-off preprocessing, kept inside a string so that it is not re-executed.
# It decodes the numeric survey codes into readable labels and was used to
# create 'diabetes_session03.csv', the file read in the next sections.
'''
diabetes_df = pl.read_csv('diabetes_NaNs.csv')

replace_dict = {}
replace_dict['Income'] = {'1.0': '[0, 10k)', '2.0': '[10k,15k)', '3.0': '[15k, 20k)',
                          '4.0': '[20k,25k)', '5.0': '[25k,35k)', '6.0': '[35k,50k)',
                          '7.0': '[50k,75k)', '8.0': '[75k, inf)'}
replace_dict['Education'] = {'1.0': 'Never', '2.0': 'Elementary', '3.0': 'SomeHighSchool',
                             '4.0': 'HighSchool', '5.0': 'SomeCollege', '6.0': 'CollegeGraduate'}
replace_dict['Age'] = {'1.0': '[18, 25)', '2.0': '[25,30)', '3.0': '[30,35)',
                       '4.0': '[35,40)', '5.0': '[40,45)', '6.0': '[45,50)', '7.0': '[50,55)',
                       '8.0': '[55,60)', '9.0': '[60,65)', '10.0': '[65,70)', '11.0': '[70,75)',
                       '12.0': '[75,80)', '13.0': '[80,inf)'}
replace_dict['Sex'] = {'0.0': 'Female', '1.0': 'Male'}
# GenHlth is coded 1-5 (1 = excellent, ..., 5 = poor). The outputs shown in this
# notebook were generated with a 0-4 mapping, so in them 'VeryGood', 'Good', 'Fair'
# and 'Poor' correspond to codes 1-4 (excellent to fair) and '5.0' to poor.
replace_dict['GenHlth'] = {'1.0': 'Excellent', '2.0': 'VeryGood', '3.0': 'Good',
                           '4.0': 'Fair', '5.0': 'Poor'}
# Binary 0/1 variables become 'No'/'Yes'.
for col in ['DiffWalk', 'NoDocbcCost', 'AnyHealthcare', 'HvyAlcoholConsump', 'Veggies',
            'Fruits', 'PhysActivity', 'HeartDiseaseorAttack', 'Stroke', 'Smoker',
            'CholCheck', 'HighChol', 'HighBP', 'Diabetes_binary']:
    replace_dict[col] = {'0.0': 'No', '1.0': 'Yes'}

for col, mapping in replace_dict.items():
    # Cast the float codes to strings ('1.0', ...) and replace them by their labels.
    diabetes_df = diabetes_df.with_columns(pl.col(col).cast(pl.Utf8).replace(mapping).alias(col))

# diabetes_df.write_csv('diabetes_session03.csv')
'''
```

Introduction

Diabetes is one of the most serious public health challenges in the United States, affecting millions of people and imposing a considerable economic burden. This chronic condition impairs the body's ability to regulate blood glucose, which is critical for energy production and overall health. After a meal, digestion breaks food down into sugars that are released into the bloodstream, and this rise in blood sugar signals the pancreas to secrete insulin, the hormone that lets cells use blood sugar for energy. The hallmark of diabetes is that the body either does not produce enough insulin or cannot use it effectively, which can reduce both quality of life and life expectancy.

Prolonged high blood sugar can lead to severe complications, including heart disease, vision loss, lower-limb amputation, and kidney disease. Although diabetes has no cure, strategies such as losing weight, eating healthily, staying physically active, and receiving medical treatment can substantially reduce its harm. Early detection is crucial because it enables earlier lifestyle changes and more effective treatment, which is why predictive models for assessing diabetes risk are valuable.

The scale of the problem is considerable. According to the Centers for Disease Control and Prevention (CDC), as of 2018 approximately 34.2 million Americans had diabetes and another 88 million had prediabetes. Moreover, many people are unaware of their condition: an estimated 1 in 5 people with diabetes and nearly 80% of those with prediabetes are undiagnosed. Diabetes takes several forms, but type II diabetes is the most common; it is influenced by age, education, income, geographic location, race, and other social determinants of health, and it disproportionately affects people of lower socioeconomic status.

The economic cost is also high: direct medical costs for diagnosed diabetes amount to approximately $327 billion per year, and total costs approach $400 billion when undiagnosed diabetes and prediabetes are included, which highlights the need for effective public health strategies and interventions.

Data

The Behavioral Risk Factor Surveillance System (BRFSS), an annual health-related telephone survey administered by the Centers for Disease Control and Prevention (CDC), systematically gathers data from over 400,000 Americans. This comprehensive survey, initiated in 1984, explores health-related risk behaviors, chronic health conditions, and the utilization of preventive services.

For this study we use data derived from the BRFSS 2015 survey, as made available on Kaggle. The full 2015 dataset published there contains responses from 441,455 participants and 330 features, which include both the questions asked of respondents and variables calculated from individual participants' responses.

The dataset used in this project is a cleaned subset of it, with 253,680 responses and 22 variables (the response Diabetes_binary plus 21 predictors) selected from the survey. These variables give a broad overview of the participants' health status and behaviors and form the basis of this project's analysis. The table below summarizes them.

| Variable Name | Description | Type |
|---|---|---|
| Diabetes_binary | 0 = no diabetes ; 1 = prediabetes or diabetes | Binary |
| HighBP | 0 = no high blood pressure ; 1 = high blood pressure | Binary |
| HighChol | 0 = no high cholesterol ; 1 = high cholesterol | Binary |
| CholCheck | 0 = no cholesterol check in 5 years ; 1 = cholesterol check in 5 years | Binary |
| BMI | Body Mass Index | Quantitative |
| Smoker | Have you smoked at least 100 cigarettes in your entire life? [Note: 5 packs = 100 cigarettes] 0 = no ; 1 = yes | Binary |
| Stroke | Have you ever had a stroke? 0 = no ; 1 = yes | Binary |
| HeartDiseaseorAttack | Coronary heart disease (CHD) or myocardial infarction (MI). 0 = no ; 1 = yes | Binary |
| PhysActivity | Physical activity in the past 30 days, not including job. 0 = no ; 1 = yes | Binary |
| Fruits | Consume fruit 1 or more times per day. 0 = no ; 1 = yes | Binary |
| Veggies | Consume vegetables 1 or more times per day. 0 = no ; 1 = yes | Binary |
| HvyAlcoholConsump | Heavy drinker (adult men >= 14 drinks per week and adult women >= 7 drinks per week). 0 = no ; 1 = yes | Binary |
| AnyHealthcare | Have any kind of health care coverage, including health insurance, prepaid plans such as HMO, etc. 0 = no ; 1 = yes | Binary |
| NoDocbcCost | Was there a time in the past 12 months when you needed to see a doctor but could not because of cost? 0 = no ; 1 = yes | Binary |
| GenHlth | Would you say that in general your health is: scale 1-5. 1 = excellent ; 2 = very good ; 3 = good ; 4 = fair ; 5 = poor | Multiclass |
| MentHlth | Now thinking about your mental health, which includes stress, depression, and problems with emotions, for how many days during the past 30 days was your mental health not good? Scale 0-30 days | Quantitative |
| PhysHlth | Now thinking about your physical health, which includes physical illness and injury, for how many days during the past 30 days was your physical health not good? Scale 0-30 days | Quantitative |
| DiffWalk | Do you have serious difficulty walking or climbing stairs? 0 = no ; 1 = yes | Binary |
| Sex | 0 = female ; 1 = male | Binary |
| Age | 13-level age category. 1 = [18, 24] ; 2 = [25, 29] ; 3 = [30, 34] ; 4 = [35, 39] ; 5 = [40, 44] ; 6 = [45, 49] ; 7 = [50, 54] ; 8 = [55, 59] ; 9 = [60, 64] ; 10 = [65, 69] ; 11 = [70, 74] ; 12 = [75, 79] ; 13 = 80 or older | Multiclass |
| Education | Education level, scale 1-6. 1 = Never attended school or only kindergarten ; 2 = Elementary ; 3 = Some high school ; 4 = High school graduate ; 5 = Some college or technical school ; 6 = College graduate | Multiclass |
| Income | Income scale 1-8. 1 = less than 10k ; 2 = [10k, 15k) ; 3 = [15k, 20k) ; 4 = [20k, 25k) ; 5 = [25k, 35k) ; 6 = [35k, 50k) ; 7 = [50k, 75k) ; 8 = 75k or more | Multiclass |

The data has been obtained from Kaggle: https://www.kaggle.com/datasets/alexteboul/diabetes-health-indicators-dataset
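The ordinal codes follow a regular pattern. As a small sketch (the function `age_interval` is hypothetical and not part of the project's EDA or PyML modules), an Age code can be decoded into the same half-open intervals used in `replace_dict` in the preprocessing code: code 1 is [18, 25), codes 2 to 12 are consecutive five-year bins starting at 25, and code 13 is open-ended.

```python
import math

def age_interval(code):
    """Return the half-open age interval (lower, upper) for an Age code from 1 to 13."""
    if not 1 <= code <= 13:
        raise ValueError(f"Age code must be between 1 and 13, got {code}")
    if code == 1:
        return (18, 25)              # the first bin spans seven years
    if code == 13:
        return (80, math.inf)        # open-ended top bin
    lower = 25 + 5 * (code - 2)      # codes 2..12 are five-year bins from 25
    return (lower, lower + 5)

for code in (1, 2, 9, 12, 13):
    print(code, age_interval(code))
```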

Reading

First of all, we read the data.

```python
diabetes_df = pl.read_csv('diabetes_session03.csv')
diabetes_df.head()
```

| Diabetes_binary | HighBP | HighChol | CholCheck | BMI | Smoker | Stroke | HeartDiseaseorAttack | PhysActivity | Fruits | Veggies | HvyAlcoholConsump | AnyHealthcare | NoDocbcCost | GenHlth | MentHlth | PhysHlth | DiffWalk | Sex | Age | Education | Income |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| str | str | str | str | f64 | str | str | str | str | str | str | str | str | str | str | f64 | f64 | str | str | str | str | str |

| "No" | "Yes" | "Yes" | "Yes" | 40.0 | "Yes" | "No" | "No" | "No" | "No" | "Yes" | "No" | "Yes" | "No" | "5.0" | 18.0 | 15.0 | "Yes" | "Female" | "[60,65)" | "HighSchool" | "[15k, 20k)" |

| "No" | "No" | "No" | "No" | 25.0 | "Yes" | "No" | "No" | "Yes" | "No" | "No" | "No" | "No" | "Yes" | "Fair" | 0.0 | 0.0 | "No" | "Female" | "[50,55)" | "CollegeGraduat… | "[0, 10k)" |

| "No" | "Yes" | "Yes" | "Yes" | 28.0 | "No" | "No" | "No" | "No" | "Yes" | "No" | "No" | "Yes" | "Yes" | "5.0" | 30.0 | 30.0 | "Yes" | "Female" | "[60,65)" | "HighSchool" | "[75k, inf)" |

| "No" | "Yes" | "No" | "Yes" | 27.0 | "No" | "No" | "No" | "Yes" | "Yes" | "Yes" | "No" | "Yes" | "No" | "Good" | 0.0 | 0.0 | "No" | "Female" | "[70,75)" | "SomeHighSchool… | "[35k,50k)" |

| "No" | "Yes" | "Yes" | "Yes" | 24.0 | "No" | "No" | "No" | "Yes" | "Yes" | "Yes" | "No" | "Yes" | "No" | "Good" | 3.0 | 0.0 | "No" | "Female" | "[70,75)" | "SomeCollege" | "[20k,25k)" |

Shape

We display the shape of the data.

```python
diabetes_df.shape
```

(253680, 22)

Data types

We check the data type of each variable as stored by Polars.

```python
# Show all 22 rows instead of Polars' default truncated view.
with pl.Config(tbl_rows=22):
    print(dtypes_df(df=diabetes_df))
```

shape: (22, 2)

┌──────────────────────┬─────────────┐

│ Columns ┆ Python_type │

│ --- ┆ --- │

│ str ┆ object │

╞══════════════════════╪═════════════╡

│ Diabetes_binary ┆ Utf8 │

│ HighBP ┆ Utf8 │

│ HighChol ┆ Utf8 │

│ CholCheck ┆ Utf8 │

│ BMI ┆ Float64 │

│ Smoker ┆ Utf8 │

│ Stroke ┆ Utf8 │

│ HeartDiseaseorAttack ┆ Utf8 │

│ PhysActivity ┆ Utf8 │

│ Fruits ┆ Utf8 │

│ Veggies ┆ Utf8 │

│ HvyAlcoholConsump ┆ Utf8 │

│ AnyHealthcare ┆ Utf8 │

│ NoDocbcCost ┆ Utf8 │

│ GenHlth ┆ Utf8 │

│ MentHlth ┆ Float64 │

│ PhysHlth ┆ Float64 │

│ DiffWalk ┆ Utf8 │

│ Sex ┆ Utf8 │

│ Age ┆ Utf8 │

│ Education ┆ Utf8 │

│ Income ┆ Utf8 │

└──────────────────────┴─────────────┘

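Unique values

We count the unique values of each variable, which gives a clearer idea of its nature. Note that a missing value (null) is counted as one more value, which is why the binary variables CholCheck, Veggies, NoDocbcCost and Sex show 3 unique values and Age shows 14 instead of 13.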

```python
n_unique = {}
print('Number of unique values:\n')
for col in diabetes_df.columns:
    # Series.unique() keeps null as a distinct value
    n_unique[col] = len(diabetes_df[col].unique())
    print(col, ':', n_unique[col])
```

Number of unique values:

Diabetes_binary : 2

HighBP : 2

HighChol : 2

CholCheck : 3

BMI : 84

Smoker : 2

Stroke : 2

HeartDiseaseorAttack : 2

PhysActivity : 2

Fruits : 2

Veggies : 3

HvyAlcoholConsump : 2

AnyHealthcare : 2

NoDocbcCost : 3

GenHlth : 5

MentHlth : 32

PhysHlth : 32

DiffWalk : 2

Sex : 3

Age : 14

Education : 6

Income : 8

```python
from IPython.display import display  # available by default inside Jupyter

for col in diabetes_df.columns:
    display(diabetes_df[col].unique())
```

| Diabetes_binary |

|---|

| str |

| "No" |

| "Yes" |

| HighBP |

|---|

| str |

| "Yes" |

| "No" |

| HighChol |

|---|

| str |

| "Yes" |

| "No" |

| CholCheck |

|---|

| str |

| "No" |

| null |

| "Yes" |

| BMI |

|---|

| f64 |

| 12.0 |

| 13.0 |

| 14.0 |

| 15.0 |

| 16.0 |

| 17.0 |

| 18.0 |

| 19.0 |

| 20.0 |

| 21.0 |

| 22.0 |

| 23.0 |

| … |

| 84.0 |

| 85.0 |

| 86.0 |

| 87.0 |

| 88.0 |

| 89.0 |

| 90.0 |

| 91.0 |

| 92.0 |

| 95.0 |

| 96.0 |

| 98.0 |

| Smoker |

|---|

| str |

| "Yes" |

| "No" |

| Stroke |

|---|

| str |

| "Yes" |

| "No" |

| HeartDiseaseorAttack |

|---|

| str |

| "No" |

| "Yes" |

| PhysActivity |

|---|

| str |

| "Yes" |

| "No" |

| Fruits |

|---|

| str |

| "Yes" |

| "No" |

| Veggies |

|---|

| str |

| null |

| "No" |

| "Yes" |

| HvyAlcoholConsump |

|---|

| str |

| "Yes" |

| "No" |

| AnyHealthcare |

|---|

| str |

| "No" |

| "Yes" |

| NoDocbcCost |

|---|

| str |

| "Yes" |

| "No" |

| null |

| GenHlth |

|---|

| str |

| "Poor" |

| "VeryGood" |

| "Fair" |

| "Good" |

| "5.0" |

| MentHlth |

|---|

| f64 |

| null |

| 0.0 |

| 1.0 |

| 2.0 |

| 3.0 |

| 4.0 |

| 5.0 |

| 6.0 |

| 7.0 |

| 8.0 |

| 9.0 |

| 10.0 |

| … |

| 19.0 |

| 20.0 |

| 21.0 |

| 22.0 |

| 23.0 |

| 24.0 |

| 25.0 |

| 26.0 |

| 27.0 |

| 28.0 |

| 29.0 |

| 30.0 |

| PhysHlth |

|---|

| f64 |

| null |

| 0.0 |

| 1.0 |

| 2.0 |

| 3.0 |

| 4.0 |

| 5.0 |

| 6.0 |

| 7.0 |

| 8.0 |

| 9.0 |

| 10.0 |

| … |

| 19.0 |

| 20.0 |

| 21.0 |

| 22.0 |

| 23.0 |

| 24.0 |

| 25.0 |

| 26.0 |

| 27.0 |

| 28.0 |

| 29.0 |

| 30.0 |

| DiffWalk |

|---|

| str |

| "No" |

| "Yes" |

| Sex |

|---|

| str |

| null |

| "Female" |

| "Male" |

| Age |

|---|

| str |

| "[25,30)" |

| "[75,80)" |

| "[45,50)" |

| "[35,40)" |

| "[70,75)" |

| "[80,inf)" |

| "[18, 25)" |

| "[55,60)" |

| "[60,65)" |

| "[65,70)" |

| "[40,45)" |

| "[30,35)" |

| null |

| "[50,55)" |

| Education |

|---|

| str |

| "SomeHighSchool… |

| "HighSchool" |

| "SomeCollege" |

| "Elementary" |

| "CollegeGraduat… |

| "Never" |

| Income |

|---|

| str |

| "[0, 10k)" |

| "[50k,75k)" |

| "[35k,50k)" |

| "[25k,35k)" |

| "[10k,15k)" |

| "[20k,25k)" |

| "[15k, 20k)" |

| "[75k, inf)" |

Missing values

Let’s get a general idea of our missing values.

```python
prop_cols_nulls(diabetes_df)
```

| Diabetes_binary | HighBP | HighChol | CholCheck | BMI | Smoker | Stroke | HeartDiseaseorAttack | PhysActivity | Fruits | Veggies | HvyAlcoholConsump | AnyHealthcare | NoDocbcCost | GenHlth | MentHlth | PhysHlth | DiffWalk | Sex | Age | Education | Income |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 |

| 0.0 | 0.0 | 0.0 | 0.023372 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.067412 | 0.0 | 0.0 | 0.001336 | 0.0 | 0.029675 | 0.026565 | 0.0 | 0.011337 | 0.04188 | 0.0 | 0.0 |

The proportion of missing values is low, at most about 6.7% (Veggies), and only seven variables are affected. Whether to impute these values or to drop the observations that contain them will be decided later.
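The two options can be sketched on a toy numeric array (hypothetical values, not the project data): listwise deletion keeps only complete rows, while median imputation fills each gap with its column's median. For categorical variables the analogous choice would be the mode; scikit-learn's `SimpleImputer`, already imported above, offers `strategy='median'` and `strategy='most_frequent'` for this.

```python
import numpy as np

# Toy data: rows are individuals, columns could be MentHlth and PhysHlth (NaN = missing).
X = np.array([[0.0, 2.0],
              [np.nan, 5.0],
              [30.0, np.nan],
              [4.0, 0.0]])

# Option 1: drop every row that contains at least one missing value.
complete_rows = ~np.isnan(X).any(axis=1)
X_dropped = X[complete_rows]

# Option 2: replace each missing value by the median of its column (NaNs ignored).
col_medians = np.nanmedian(X, axis=0)
X_imputed = np.where(np.isnan(X), col_medians, X)

print(X_dropped)
print(X_imputed)
```

Deletion is simple but loses whole rows, and since different rows are missing in different columns the losses add up; imputation keeps every observation at the cost of pulling the imputed values toward the center of each distribution.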

EDA

In this section we describe the variables in our data.

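Descriptive summary

First, we define the lists of categorical and quantitative variables from their Polars data types: Float64 columns are treated as quantitative and string (Utf8) columns as categorical.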

```python
quant_columns = [col for col in diabetes_df.columns if diabetes_df[col].dtype == pl.Float64]
cat_columns = [col for col in diabetes_df.columns if diabetes_df[col].dtype == pl.Utf8]

# Alternative (not executed): classify columns by their number of unique values.
'''
len_unique = []
columns_df = np.array(diabetes_df.columns)
for col in columns_df:
    len_unique.append(len(diabetes_df[col].unique()))
len_unique = np.array(len_unique)

binary_columns = columns_df[len_unique == 2].tolist()
multi_columns = columns_df[(len_unique > 2) & (len_unique <= 14)].tolist()
cat_columns = binary_columns + multi_columns
quant_columns = columns_df[len_unique > 14].tolist()
'''
```


```python
quant_columns
```

['BMI', 'MentHlth', 'PhysHlth']

```python
cat_columns
```

['Diabetes_binary', 'HighBP', 'HighChol', 'CholCheck', 'Smoker', 'Stroke', 'HeartDiseaseorAttack', 'PhysActivity', 'Fruits', 'Veggies', 'HvyAlcoholConsump', 'AnyHealthcare', 'NoDocbcCost', 'GenHlth', 'DiffWalk', 'Sex', 'Age', 'Education', 'Income']
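The commented-out alternative classifies columns by their number of unique values (2 for binary, 3 to 14 for multiclass, more than 14 for quantitative). Because `unique()` counts null as a value, a binary column with missing values such as CholCheck (3 unique values including null) would fall among the multiclass ones under that rule. A pure-Python sketch of the same rule that ignores missing values is shown below; the function name and toy columns are hypothetical.

```python
def classify_columns(columns, max_categories=14):
    """Split column names into binary, multiclass and quantitative lists by their
    number of distinct non-missing values (None marks a missing value)."""
    binary, multi, quant = [], [], []
    for name, values in columns.items():
        n_unique = len({v for v in values if v is not None})
        if n_unique <= 2:              # a constant column would also land here
            binary.append(name)
        elif n_unique <= max_categories:
            multi.append(name)
        else:
            quant.append(name)
    return binary, multi, quant

toy = {
    'CholCheck': ['Yes', None, 'No', 'Yes'],    # binary despite the missing value
    'GenHlth': ['Good', 'Fair', 'Poor', 'VeryGood'],
    'BMI': [float(b) for b in range(12, 40)],   # 28 distinct values
}
print(classify_columns(toy))
```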

We use the summary function to obtain a descriptive summary of both the categorical and the quantitative variables. It returns a table of useful metrics for each type of variable.

For quantitative variables, the following metrics are computed:

- n_unique: number of unique values of the variable.
- prop_nan: proportion of missing values of the variable.
- mean: mean of the variable.
- std: standard deviation of the variable.
- min: minimum value of the variable.
- Q10: 10th percentile (0.10 quantile) of the variable.
- Q25: 25th percentile (first quartile) of the variable.
- median: median of the variable.
- Q75: 75th percentile (third quartile) of the variable.
- Q90: 90th percentile (0.90 quantile) of the variable.
- max: maximum value of the variable.
- kurtosis: kurtosis of the variable.
- skew: skewness of the variable.
- n_outliers: number of outlier observations of the variable.
- n_not_outliers: number of non-outlier observations of the variable.
- prop_outliers: proportion of outlier observations of the variable.
- prop_not_outliers: proportion of non-outlier observations of the variable.

For categorical variables, the following are computed:

- n_unique: number of unique values of the variable.
- prop_nan: proportion of missing values of the variable.
- mode: mode of the variable.
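The `summary` function belongs to the author's own EDA module, so its exact formulas are not shown here. As a hedged sketch, the quantiles and the usual moment-based shape measures (Fisher-Pearson skewness and excess kurtosis, without small-sample corrections) can be computed with NumPy after dropping missing values. Whether `summary` reports excess or raw kurtosis, and which quantile interpolation it uses, are assumptions that cannot be checked from its output.

```python
import numpy as np

def moment_stats(x):
    """Quantiles, skewness and excess kurtosis of a 1-D sample, ignoring NaNs."""
    x = np.asarray(x, dtype=float)
    x = x[~np.isnan(x)]                       # drop missing values first
    mean = x.mean()
    std = x.std()                             # population standard deviation (ddof=0)
    z = (x - mean) / std
    return {
        'Q10': np.quantile(x, 0.10),
        'Q25': np.quantile(x, 0.25),
        'median': np.median(x),
        'Q75': np.quantile(x, 0.75),
        'Q90': np.quantile(x, 0.90),
        'skew': np.mean(z ** 3),              # > 0 indicates a long right tail
        'kurtosis': np.mean(z ** 4) - 3.0,    # 0 for a normal distribution
    }

sample = [1.0, 2.0, 3.0, 4.0, 5.0, np.nan]
print(moment_stats(sample))
```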

```python
quant_summary, cat_summary = summary(df=diabetes_df, auto_col=False,
                                     quant_col_names=quant_columns,
                                     cat_col_names=cat_columns)
```

```python
quant_summary
```

| | BMI | MentHlth | PhysHlth |
|---|---|---|---|
| n_unique | 84 | 32 | 32 |
| prop_nan | 0.0 | 0.029675 | 0.026565 |
| mean | 28.382364 | 3.185739 | 4.244253 |
| std | 6.608694 | 7.414006 | 8.720454 |
| min | 12.0 | 0.0 | 0.0 |
| Q10 | 22.0 | 0.0 | 0.0 |
| Q25 | 24.0 | 0.0 | 0.0 |
| median | 27.0 | 0.0 | 0.0 |
| Q75 | 31.0 | 2.0 | 3.0 |
| Q90 | 36.0 | 10.0 | 20.0 |
| max | 98.0 | 30.0 | 30.0 |
| kurtosis | 13.997233 | NaN | NaN |
| skew | 2.121991 | NaN | NaN |
| n_outliers | 9847 | 35146 | 39877 |
| n_not_outliers | 243833 | 218534 | 213803 |
| prop_outliers | 0.038817 | 0.138545 | 0.157194 |
| prop_not_outliers | 0.961183 | 0.861455 | 0.842806 |

```python
cat_summary
```

| | Diabetes_binary | HighBP | HighChol | CholCheck | Smoker | Stroke | HeartDiseaseorAttack | PhysActivity | Fruits | Veggies | HvyAlcoholConsump | AnyHealthcare | NoDocbcCost | GenHlth | DiffWalk | Sex | Age | Education | Income |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| n_unique | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 5 | 2 | 2 | 13 | 6 | 8 |
| prop_nan | 0.0 | 0.0 | 0.0 | 0.023372 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.067412 | 0.0 | 0.0 | 0.001336 | 0.0 | 0.0 | 0.011337 | 0.04188 | 0.0 | 0.0 |
| mode | No | No | No | Yes | No | No | No | Yes | Yes | Yes | No | Yes | No | Good | No | Female | [60,65) | CollegeGraduate | [75k, inf) |

Outliers

Next, we use the outliers_table function to build a table of outlier-related metrics for the quantitative variables.

```python
outliers_table(df=diabetes_df, auto=False, col_names=quant_columns)
```

| quant_variables | lower_bound | upper_bound | n_outliers | n_not_outliers | prop_outliers | prop_not_outliers |

|---|---|---|---|---|---|---|

| str | f64 | f64 | i64 | i64 | f64 | f64 |

| "BMI" | 13.5 | 41.5 | 9847 | 243833 | 0.038817 | 0.961183 |

| "MentHlth" | -3.0 | 5.0 | 35146 | 211006 | 0.142782 | 0.857218 |

| "PhysHlth" | -4.5 | 7.5 | 39877 | 207064 | 0.161484 | 0.838516 |

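For each quantitative variable, this table contains the lower and upper bounds beyond which an observation is considered an outlier, together with the number and proportion of outlier and non-outlier observations. The bounds agree with the 1.5 × IQR rule applied to the quartiles in quant_summary: for BMI, Q25 = 24 and Q75 = 31, so IQR = 7 and the bounds are 24 - 10.5 = 13.5 and 31 + 10.5 = 41.5. Unlike summary, which counts missing values as non-outliers, this table leaves them out of the counts, which is why the proportions for MentHlth and PhysHlth are slightly higher here (for example, 35,146 / 246,152 ≈ 0.1428 instead of 35,146 / 253,680 ≈ 0.1385).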
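A minimal sketch of the 1.5 × IQR rule, checked against the quartiles reported by `summary`. The helper names are hypothetical; `outliers_table` itself lives in the author's EDA module. The strict inequalities match the tables, where a MentHlth value of exactly 5 (the upper bound) is counted as a non-outlier.

```python
def iqr_bounds(q25, q75, k=1.5):
    """Tukey fences: values outside [q25 - k*IQR, q75 + k*IQR] are flagged as outliers."""
    iqr = q75 - q25
    return q25 - k * iqr, q75 + k * iqr

def count_outliers(values, q25, q75, k=1.5):
    """Return (n_outliers, n_not_outliers) among non-missing values (None is skipped)."""
    lower, upper = iqr_bounds(q25, q75, k)
    present = [v for v in values if v is not None]
    n_out = sum(1 for v in present if v < lower or v > upper)
    return n_out, len(present) - n_out

# Quartiles taken from the quant_summary table.
print(iqr_bounds(24.0, 31.0))   # BMI      -> (13.5, 41.5)
print(iqr_bounds(0.0, 2.0))     # MentHlth -> (-3.0, 5.0)
print(iqr_bounds(0.0, 3.0))     # PhysHlth -> (-4.5, 7.5)
```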

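Frequency table

Here we compute a frequency table for each categorical variable, after dropping the rows that have a missing value in any categorical variable; this leaves 218,534 observations.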
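The columns of these tables follow directly from counting. A minimal pure-Python sketch with a hypothetical helper name and toy data is shown below; the project's `freq_table` comes from the author's EDA module and may differ in details such as rounding (its `rel_freq` column appears rounded to four decimals).

```python
from collections import Counter

def frequency_table(values):
    """Rows of (value, abs_freq, rel_freq, cum_abs_freq, cum_rel_freq), sorted by value.
    Values must be non-missing and mutually comparable so that they can be sorted."""
    counts = Counter(values)
    n = len(values)
    rows, cum = [], 0
    for value in sorted(counts):
        cum += counts[value]
        rows.append((value, counts[value], counts[value] / n, cum, cum / n))
    return rows

for row in frequency_table(['No', 'Yes', 'No', 'No']):
    print(row)
```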

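```python
# Drop rows with a missing value in any categorical column before tabulating.
diabetes_df_non_cat_NaNs = diabetes_df.drop_nulls(subset=cat_columns)

for x in cat_columns:
    try:
        display(freq_table(X=diabetes_df_non_cat_NaNs[x]))
    except Exception:
        print(f"Couldn't be computed for {x}.")
```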

| Diabetes_binary: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 188001 | 0.8603 | 188001 | 0.860283 |

| "Yes" | 30533 | 0.1397 | 218534 | 1.0 |

| HighBP: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 124818 | 0.5712 | 124818 | 0.571161 |

| "Yes" | 93716 | 0.4288 | 218534 | 1.0 |

| HighChol: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 125796 | 0.5756 | 125796 | 0.575636 |

| "Yes" | 92738 | 0.4244 | 218534 | 1.0 |

| CholCheck: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 8150 | 0.0373 | 8150 | 0.037294 |

| "Yes" | 210384 | 0.9627 | 218534 | 1.0 |

| Smoker: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 121774 | 0.5572 | 121774 | 0.557231 |

| "Yes" | 96760 | 0.4428 | 218534 | 1.0 |

| Stroke: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 209632 | 0.9593 | 209632 | 0.959265 |

| "Yes" | 8902 | 0.0407 | 218534 | 1.0 |

| HeartDiseaseorAttack: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 197971 | 0.9059 | 197971 | 0.905905 |

| "Yes" | 20563 | 0.0941 | 218534 | 1.0 |

| PhysActivity: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 53302 | 0.2439 | 53302 | 0.243907 |

| "Yes" | 165232 | 0.7561 | 218534 | 1.0 |

| Fruits: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 79866 | 0.3655 | 79866 | 0.365463 |

| "Yes" | 138668 | 0.6345 | 218534 | 1.0 |

| Veggies: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 41320 | 0.1891 | 41320 | 0.189078 |

| "Yes" | 177214 | 0.8109 | 218534 | 1.0 |

| HvyAlcoholConsump: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 206254 | 0.9438 | 206254 | 0.943807 |

| "Yes" | 12280 | 0.0562 | 218534 | 1.0 |

| AnyHealthcare: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 10705 | 0.049 | 10705 | 0.048986 |

| "Yes" | 207829 | 0.951 | 218534 | 1.0 |

| NoDocbcCost: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 200146 | 0.9159 | 200146 | 0.915857 |

| "Yes" | 18388 | 0.0841 | 218534 | 1.0 |

| GenHlth: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "5.0" | 10377 | 0.0475 | 10377 | 0.047485 |

| "Fair" | 65144 | 0.2981 | 75521 | 0.34558 |

| "Good" | 76805 | 0.3515 | 152326 | 0.697036 |

| "Poor" | 27183 | 0.1244 | 179509 | 0.821424 |

| "VeryGood" | 39025 | 0.1786 | 218534 | 1.0 |

| DiffWalk: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "No" | 181758 | 0.8317 | 181758 | 0.831715 |

| "Yes" | 36776 | 0.1683 | 218534 | 1.0 |

| Sex: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "Female" | 122388 | 0.56 | 122388 | 0.560041 |

| "Male" | 96146 | 0.44 | 218534 | 1.0 |

| Age: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "[18, 25)" | 4920 | 0.0225 | 4920 | 0.022514 |

| "[25,30)" | 6507 | 0.0298 | 11427 | 0.052289 |

| "[30,35)" | 9603 | 0.0439 | 21030 | 0.096232 |

| "[35,40)" | 11917 | 0.0545 | 32947 | 0.150764 |

| "[40,45)" | 13914 | 0.0637 | 46861 | 0.214433 |

| "[45,50)" | 17104 | 0.0783 | 63965 | 0.2927 |

| "[50,55)" | 22666 | 0.1037 | 86631 | 0.396419 |

| "[55,60)" | 26541 | 0.1215 | 113172 | 0.517869 |

| "[60,65)" | 28624 | 0.131 | 141796 | 0.648851 |

| "[65,70)" | 27711 | 0.1268 | 169507 | 0.775655 |

| "[70,75)" | 20241 | 0.0926 | 189748 | 0.868277 |

| "[75,80)" | 13811 | 0.0632 | 203559 | 0.931475 |

| "[80,inf)" | 14975 | 0.0685 | 218534 | 1.0 |

| Education: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "CollegeGraduat… | 92442 | 0.423 | 92442 | 0.42301 |

| "Elementary" | 3455 | 0.0158 | 95897 | 0.43882 |

| "HighSchool" | 54003 | 0.2471 | 149900 | 0.685934 |

| "Never" | 146 | 0.0007 | 150046 | 0.686603 |

| "SomeCollege" | 60238 | 0.2756 | 210284 | 0.962248 |

| "SomeHighSchool… | 8250 | 0.0378 | 218534 | 1.0 |

| Income: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| str | i64 | f64 | i64 | f64 |

| "[0, 10k)" | 8492 | 0.0389 | 8492 | 0.038859 |

| "[10k,15k)" | 10151 | 0.0465 | 18643 | 0.085309 |

| "[15k, 20k)" | 13775 | 0.063 | 32418 | 0.148343 |

| "[20k,25k)" | 17326 | 0.0793 | 49744 | 0.227626 |

| "[25k,35k)" | 22355 | 0.1023 | 72099 | 0.329921 |

| "[35k,50k)" | 31384 | 0.1436 | 103483 | 0.473533 |

| "[50k,75k)" | 37275 | 0.1706 | 140758 | 0.644101 |

| "[75k, inf)" | 77776 | 0.3559 | 218534 | 1.0 |

Similarly, we compute frequency tables for the quantitative variables. These are less informative for variables with many distinct values, such as BMI, unless they are discretized first. Here missing values are not dropped, so they appear as a NaN row.
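For BMI, one natural discretization is the standard WHO adult categories: underweight below 18.5, normal weight from 18.5 to under 25, overweight from 25 to under 30, and obese at 30 or more. The sketch below assumes those cut points; it is an illustration, not necessarily the binning that `quant_to_cat` applies elsewhere in this project.

```python
from bisect import bisect_right

# Assumed cut points: WHO adult BMI categories.
BMI_CUTS = [18.5, 25.0, 30.0]
BMI_LABELS = ['Underweight', 'Normal', 'Overweight', 'Obese']

def bmi_category(bmi):
    """Map a BMI value to its category; each interval is closed on the left."""
    return BMI_LABELS[bisect_right(BMI_CUTS, bmi)]

for bmi in (17.0, 18.5, 24.9, 25.0, 31.0, 98.0):
    print(bmi, bmi_category(bmi))
```

Since BMI is recorded as whole numbers in this data (12.0, 13.0, ...), the 18.5 cut effectively separates 18 from 19.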

```python
for x in quant_columns:
    try:
        display(freq_table(X=diabetes_df[x]))
    except Exception:
        print(f"Couldn't be computed for {x}.")
```

| BMI: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| f64 | i64 | f64 | i64 | f64 |

| 12.0 | 6 | 0.0 | 6 | 0.000024 |

| 13.0 | 21 | 0.0001 | 27 | 0.000106 |

| 14.0 | 41 | 0.0002 | 68 | 0.000268 |

| 15.0 | 132 | 0.0005 | 200 | 0.000788 |

| 16.0 | 348 | 0.0014 | 548 | 0.00216 |

| 17.0 | 776 | 0.0031 | 1324 | 0.005219 |

| 18.0 | 1803 | 0.0071 | 3127 | 0.012327 |

| 19.0 | 3968 | 0.0156 | 7095 | 0.027968 |

| 20.0 | 6327 | 0.0249 | 13422 | 0.052909 |

| 21.0 | 9855 | 0.0388 | 23277 | 0.091757 |

| 22.0 | 13643 | 0.0538 | 36920 | 0.145538 |

| 23.0 | 15610 | 0.0615 | 52530 | 0.207072 |

| … | … | … | … | … |

| 84.0 | 44 | 0.0002 | 253533 | 0.999421 |

| 85.0 | 1 | 0.0 | 253534 | 0.999424 |

| 86.0 | 1 | 0.0 | 253535 | 0.999428 |

| 87.0 | 61 | 0.0002 | 253596 | 0.999669 |

| 88.0 | 2 | 0.0 | 253598 | 0.999677 |

| 89.0 | 28 | 0.0001 | 253626 | 0.999787 |

| 90.0 | 1 | 0.0 | 253627 | 0.999791 |

| 91.0 | 1 | 0.0 | 253628 | 0.999795 |

| 92.0 | 32 | 0.0001 | 253660 | 0.999921 |

| 95.0 | 12 | 0.0 | 253672 | 0.999968 |

| 96.0 | 1 | 0.0 | 253673 | 0.999972 |

| 98.0 | 7 | 0.0 | 253680 | 1.0 |

| MentHlth: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| f64 | i64 | f64 | i64 | f64 |

| 0.0 | 170458 | 0.6719 | 170458 | 0.671941 |

| 1.0 | 8283 | 0.0327 | 178741 | 0.704592 |

| 2.0 | 12668 | 0.0499 | 191409 | 0.754529 |

| 3.0 | 7153 | 0.0282 | 198562 | 0.782726 |

| 4.0 | 3693 | 0.0146 | 202255 | 0.797284 |

| 5.0 | 8751 | 0.0345 | 211006 | 0.83178 |

| 6.0 | 967 | 0.0038 | 211973 | 0.835592 |

| 7.0 | 3001 | 0.0118 | 214974 | 0.847422 |

| 8.0 | 617 | 0.0024 | 215591 | 0.849854 |

| 9.0 | 84 | 0.0003 | 215675 | 0.850185 |

| 10.0 | 6188 | 0.0244 | 221863 | 0.874578 |

| 11.0 | 40 | 0.0002 | 221903 | 0.874736 |

| … | … | … | … | … |

| 20.0 | 3277 | 0.0129 | 232330 | 0.915839 |

| 21.0 | 220 | 0.0009 | 232550 | 0.916706 |

| 22.0 | 62 | 0.0002 | 232612 | 0.91695 |

| 23.0 | 37 | 0.0001 | 232649 | 0.917096 |

| 24.0 | 32 | 0.0001 | 232681 | 0.917222 |

| 25.0 | 1142 | 0.0045 | 233823 | 0.921724 |

| 26.0 | 44 | 0.0002 | 233867 | 0.921898 |

| 27.0 | 78 | 0.0003 | 233945 | 0.922205 |

| 28.0 | 320 | 0.0013 | 234265 | 0.923467 |

| 29.0 | 151 | 0.0006 | 234416 | 0.924062 |

| 30.0 | 11736 | 0.0463 | 246152 | 0.970325 |

| NaN | 7528 | 0.0297 | 253680 | 1.0 |

| PhysHlth: unique values | abs_freq | rel_freq | cum_abs_freq | cum_rel_freq |

|---|---|---|---|---|

| f64 | i64 | f64 | i64 | f64 |

| 0.0 | 155770 | 0.614 | 155770 | 0.614041 |

| 1.0 | 11092 | 0.0437 | 166862 | 0.657766 |

| 2.0 | 14361 | 0.0566 | 181223 | 0.714376 |

| 3.0 | 8271 | 0.0326 | 189494 | 0.74698 |

| 4.0 | 4422 | 0.0174 | 193916 | 0.764412 |

| 5.0 | 7438 | 0.0293 | 201354 | 0.793732 |

| 6.0 | 1294 | 0.0051 | 202648 | 0.798833 |

| 7.0 | 4416 | 0.0174 | 207064 | 0.816241 |

| 8.0 | 788 | 0.0031 | 207852 | 0.819347 |

| 9.0 | 173 | 0.0007 | 208025 | 0.820029 |

| 10.0 | 5469 | 0.0216 | 213494 | 0.841588 |

| 11.0 | 58 | 0.0002 | 213552 | 0.841816 |

| … | … | … | … | … |

| 20.0 | 3198 | 0.0126 | 225018 | 0.887015 |

| 21.0 | 650 | 0.0026 | 225668 | 0.889577 |

| 22.0 | 70 | 0.0003 | 225738 | 0.889853 |

| 23.0 | 54 | 0.0002 | 225792 | 0.890066 |

| 24.0 | 66 | 0.0003 | 225858 | 0.890326 |

| 25.0 | 1299 | 0.0051 | 227157 | 0.895447 |

| 26.0 | 68 | 0.0003 | 227225 | 0.895715 |

| 27.0 | 97 | 0.0004 | 227322 | 0.896097 |

| 28.0 | 509 | 0.002 | 227831 | 0.898104 |

| 29.0 | 209 | 0.0008 | 228040 | 0.898928 |

| 30.0 | 18901 | 0.0745 | 246941 | 0.973435 |

| NaN | 6739 | 0.0266 | 253680 | 1.0 |

Visualization#

In this section we visualize the dataset.

Histogram matrix#

We compute a histogram for each quantitative variable.

histogram_matrix(df=diabetes_df, bins=20, n_cols=3, title='Histogram Matrix - Quantitative variables',
                 title_fontsize=15, subtitles_fontsize=12, n_xticks=8, figsize=(17,4), auto_col=False,
                 quant_col_names=quant_columns, title_height=1.01)

Each histogram shows the distribution of respondents' reported values for one of these health indicators.

The BMI histogram is right-skewed: most respondents have a BMI in the lower-to-middle part of the range, and a long tail contains fewer individuals with higher values.

The Mental Health (MentHlth) histogram is strongly right-skewed. MentHlth records the number of days in the past 30 on which the respondent's mental health was not good, so most respondents report few or no such days, while a small proportion report many, with a cluster at the maximum of 30 days.

The Physical Health (PhysHlth) histogram shows the same right skew: most responses cluster at the low end of the scale (few days of poor physical health), and fewer responses indicate worse physical health.

Overall, most respondents report few days of poor mental or physical health, while BMI is spread over a wider range of values.
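The direction of skew can also be checked numerically. When most observations sit at the low end and a few are very large, the sample skewness is positive, which is what "right-skewed" means. Below is a minimal NumPy sketch of the Fisher-Pearson coefficient g1 = m3 / m2^(3/2). The function name and the toy values, shaped like MentHlth with many zeros and a few large counts, are illustrative only.

```python
import numpy as np

def sample_skewness(x):
    """Fisher-Pearson skewness g1 = m3 / m2**1.5, ignoring NaNs."""
    x = np.asarray(x, dtype=float)
    x = x[~np.isnan(x)]
    d = x - x.mean()
    m2 = np.mean(d**2)  # second central moment (population form)
    m3 = np.mean(d**3)  # third central moment
    return m3 / m2**1.5

# Illustrative values only: most respondents report 0 days, a few report many
days = [0, 0, 0, 0, 0, 0, 1, 2, 5, 30]
print(sample_skewness(days))        # positive: the tail extends to the right
print(sample_skewness([1, 2, 3]))   # 0.0 for symmetric data
```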

Boxplot matrix#

We compute a boxplot for each quantitative variable.

boxplot_matrix(df=diabetes_df, n_cols=3, title='Boxplot Matrix - Quantitative variables', title_fontsize=15,
               subtitles_fontsize=12, n_xticks=7, figsize=(13,3), auto_col=False, quant_col_names=quant_columns,
               title_height=1.05, statistics=['median', 'mean'], color_stats=['green', 'red'],
               lines_width=1.2, legend_size=9, bbox_to_anchor=(0.5, -0.2))

The last two variables do not show median and mean lines because they contain missing values.
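boxplot_matrix is not shown in this section, so the following is an assumption about its internals. If it computes the median and mean with plain NumPy reductions, a single NaN turns each result into NaN, and there is no line to draw. NumPy's NaN-aware variants skip the missing values instead:

```python
import numpy as np

x = np.array([0.0, 2.0, 30.0, np.nan])  # one missing response

print(np.mean(x), np.median(x))        # nan nan: NaN propagates through the reduction
print(np.nanmean(x), np.nanmedian(x))  # statistics of the three observed values
```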

diabetes_df_non_quant_NaNs = diabetes_df.drop_nulls(subset=quant_columns)

prop_cols_nulls(diabetes_df_non_quant_NaNs)

| Diabetes_binary | HighBP | HighChol | CholCheck | BMI | Smoker | Stroke | HeartDiseaseorAttack | PhysActivity | Fruits | Veggies | HvyAlcoholConsump | AnyHealthcare | NoDocbcCost | GenHlth | MentHlth | PhysHlth | DiffWalk | Sex | Age | Education | Income |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 | f64 |

| 0.0 | 0.0 | 0.0 | 0.023362 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.067201 | 0.0 | 0.0 | 0.001369 | 0.0 | 0.0 | 0.0 | 0.0 | 0.011334 | 0.041928 | 0.0 | 0.0 |

boxplot_matrix(df=diabetes_df_non_quant_NaNs, n_cols=3, title='Boxplot Matrix - Quantitative variables', title_fontsize=15,
               subtitles_fontsize=12, n_xticks=7, figsize=(13,3), auto_col=False, quant_col_names=quant_columns,
               title_height=1.05, statistics=['median', 'mean'], color_stats=['green', 'red'],
               lines_width=1.2, legend_size=9, bbox_to_anchor=(0.5, -0.2))

After the data transformation to remove rows with null values in the quantitative variables, the boxplot matrix shows the following:

For BMI, the distribution remains wide with several high-value outliers, indicating a varied body weight distribution. The median is lower than the mean, suggesting a right-skewed distribution.

Mental Health and Physical Health both show a concentration of values at the lower end of the scale. Because most respondents report 0 days, the median is 0, and the long tail of higher values pulls the mean above it. In other words, most people report few issues, but a tail of respondents report many days.

The presence of outliers is still noticeable in all three health indicators, implying that while most values cluster around a central point, there are individuals with significantly different reported outcomes.

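The median and mean lines are still missing for the last two variables, which indicates that missing values persist in them. A likely cause, assuming these entries are stored as floating-point NaN rather than null (the frequency tables list them as NaN), is that Polars treats NaN and null as different values, and drop_nulls removes only nulls. Converting NaN to null first, for example with diabetes_df.with_columns(pl.col(quant_columns).fill_nan(None)), would let drop_nulls remove those rows.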

ECDFplot matrix#

ecdf_matrix(df=diabetes_df, n_cols=3, title='ECDFplot Matrix - Quantitative variables', title_fontsize=15,
            subtitles_fontsize=13, n_xticks=7, figsize=(13,4), auto_col=False, quant_col_names=quant_columns,
            title_height=1.01)

The BMI ECDF rises steeply between roughly 20 and 40 and then flattens into a long, sparse tail that reaches 98, so most respondents have a BMI within that central range.

The Mental Health ECDF starts with a large jump at 0, because about two thirds of respondents report no days of poor mental health. It then rises slowly and ends with a final step at 30.

The Physical Health ECDF has a similar shape to the Mental Health curve, with a somewhat smaller jump at 0 and a larger final step at 30.
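An ECDF evaluated at x is the proportion of observations less than or equal to x. The minimal NumPy sketch below is not the notebook's ecdf_matrix helper. The function name ecdf is ours, and the helper drops NaNs before counting. On MentHlth, its value at x = 0 would be the share of respondents reporting zero days.

```python
import numpy as np

def ecdf(x):
    """Return the distinct observed values and the ECDF evaluated at each of them."""
    x = np.asarray(x, dtype=float)
    x = x[~np.isnan(x)]                      # ECDF over observed values only
    values, counts = np.unique(x, return_counts=True)
    return values, np.cumsum(counts) / x.size

values, F = ecdf([0, 0, 0, 2, 30, np.nan])
print(values, F)  # values 0, 2, 30 with ECDF 0.6, 0.8, 1.0
```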

Barplot matrix#

We use bar plots to show the distribution of the categorical variables, especially the binary ones.

barplot_matrix(diabetes_df, n_cols=3, title='Barplot Matrix - Categorical variables',
               figsize=(15,20), auto_col=False, cat_col_list=cat_columns, title_height=0.92,
               hspace=1, wspace=0.2, x_rotation=45, title_fontsize=15, subtitles_fontsize=13, n_yticks=3)

"Diabetes_binary" shows the split between individuals with and without diabetes. "HighBP" and "HighChol" show how many individuals report high blood pressure and high cholesterol, both important risk factors for diabetes. "CholCheck" shows that most participants have had their cholesterol checked. "Smoker" shows that a considerable share of individuals identify as smokers, a risk factor for many health problems, including diabetes. "Stroke" indicates the prevalence of stroke among the respondents.

Lifestyle habits are captured in "PhysActivity", "Fruits", and "Veggies", which show the proportions of individuals who are physically active and who regularly eat fruits and vegetables. "HvyAlcoholConsump" shows how many participants drink heavily. "AnyHealthcare" reflects access to healthcare, a crucial factor in managing chronic diseases, and "NoDocbcCost" points to economic barriers, showing the proportion of people who skipped seeing a doctor because of cost. "GenHlth" represents self-assessed general health, and "DiffWalk" shows how many individuals have difficulty walking, which may relate to physical health or disability.

The "Sex" plot shows the relative proportions of female and male participants. "Age" shows that the sample is concentrated in the middle and older age groups (roughly 50 to 75), with relatively few participants under 35, while "Education" shows the distribution of educational attainment. Finally, "Income" shows the distribution of income brackets, with the top bracket, [75k, inf), as the largest group (about 36% of respondents).

Each bar graph provides insight into the frequency and distribution of each category, which is vital for understanding factors associated with diabetes within the population.

Response vs Predictors Analysis#

First, we separate the response variable from the predictors.

response = 'Diabetes_binary'

predictors = [col for col in diabetes_df.columns if col != response]

quant_predictors = [col for col in predictors if col in quant_columns]

cat_predictors = [col for col in predictors if col in cat_columns]

Response vs Quantitative Predictors#

Now let's compare the distribution of each quantitative predictor across the two classes of the binary response variable, Diabetes_binary.

boxplot_2D_matrix(df=diabetes_df, n_cols=3, tittle='Diabetes vs Quantitative Predictors', figsize=(15,4),
                  cat_col_names=[response], quant_col_names=quant_predictors,
                  n_yticks=5, title_height=1.02, title_fontsize=14, subtitles_fontsize=11,
                  statistics=['mean'], lines_width=0.8, bbox_to_anchor=(0.53, -0.15),
                  legend_size=9, color_stats=['red'], showfliers=True,
                  hspace=0.5, wspace=0.3, x_rotation=45, auto_col=False)

For BMI, individuals with diabetes ("Yes") have a higher mean BMI (indicated by the red dashed line) than those without diabetes ("No"), 31.9 versus 27.8 in the summary below, and their distribution has a larger spread. This suggests an association between higher BMI and diabetes.

The Mental Health (MentHlth) boxplot shows a slightly higher mean for those with diabetes (4.5 versus 3.0 days), but the difference is less pronounced than for BMI.

Physical Health (PhysHlth) shows a notably higher mean for diabetic individuals (about 8.0 versus 3.6 days), suggesting that people with diabetes tend to report worse physical health than those without.
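One way to compare "how pronounced" these differences are across predictors measured on different scales is to divide the difference in means by a pooled standard deviation (Cohen's d). The notebook does not compute this. The sketch below uses the standard formula on toy arrays.

```python
import numpy as np

def cohens_d(a, b):
    """Cohen's d: (mean(a) - mean(b)) divided by the pooled standard deviation.

    NaNs are dropped from each group before computing the statistic.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    a, b = a[~np.isnan(a)], b[~np.isnan(b)]
    n_a, n_b = a.size, b.size
    # Pooled variance built from the unbiased (ddof=1) variance of each group
    pooled_var = ((n_a - 1) * a.var(ddof=1) + (n_b - 1) * b.var(ddof=1)) / (n_a + n_b - 2)
    return (a.mean() - b.mean()) / np.sqrt(pooled_var)

# Toy example: group means 4 and 2, both group variances equal to 1
print(cohens_d([3, 4, 5], [1, 2, 3]))  # 2.0
```

Applied to the notebook's data, a and b would be, for example, the BMI values of the "Yes" and "No" groups. A common rule of thumb reads |d| of about 0.2, 0.5, and 0.8 as small, medium, and large.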

Cross quant-cat descriptive summary#

Next, we display a descriptive summary of each quantitative predictor within each class of the response variable.

for col in quant_predictors:
    print('---------------------------------------------------------------------------------')
    print(col)
    display(cross_quant_cat_summary(df=diabetes_df, cat_col=response, quant_col=col))

---------------------------------------------------------------------------------

BMI

| Diabetes_binary | prop_BMI | mean_BMI | std_BMI | min_BMI | Q10_BMI | Q25_BMI | median_BMI | Q75_BMI | Q90_BMI | max_BMI | kurtosis_BMI | skew_BMI | prop_outliers_BMI | prop_nan_BMI |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| object | object | object | object | object | object | object | object | object | object | object | object | object | f64 | f64 |

| Yes | 0.139 | 31.944 | 7.363 | 13.0 | 24.0 | 27.0 | 31.0 | 35.0 | 41.0 | 98.0 | 5.716 | 1.527 | 0.029679 | 0.0 |

| No | 0.861 | 27.806 | 6.291 | 12.0 | 21.0 | 24.0 | 27.0 | 31.0 | 35.0 | 98.0 | 13.617 | 2.331 | 0.034035 | 0.0 |

---------------------------------------------------------------------------------

MentHlth

| Diabetes_binary | prop_MentHlth | mean_MentHlth | std_MentHlth | min_MentHlth | Q10_MentHlth | Q25_MentHlth | median_MentHlth | Q75_MentHlth | Q90_MentHlth | max_MentHlth | kurtosis_MentHlth | skew_MentHlth | prop_outliers_MentHlth | prop_nan_MentHlth |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| object | object | object | object | object | object | object | object | object | object | object | object | object | f64 | f64 |

| No | 0.835 | 2.979 | 7.113 | 0.0 | 0.0 | 0.0 | 0.0 | 2.0 | 10.0 | 30.0 | 7.364 | 2.862 | 0.129906 | 0.02956 |

| Yes | 0.135 | 4.467 | 8.952 | 0.0 | 0.0 | 0.0 | 0.0 | 3.0 | 20.0 | 30.0 | 2.864 | 2.063 | 0.176342 | 0.030385 |

---------------------------------------------------------------------------------

PhysHlth

| Diabetes_binary | prop_PhysHlth | mean_PhysHlth | std_PhysHlth | min_PhysHlth | Q10_PhysHlth | Q25_PhysHlth | median_PhysHlth | Q75_PhysHlth | Q90_PhysHlth | max_PhysHlth | kurtosis_PhysHlth | skew_PhysHlth | prop_outliers_PhysHlth | prop_nan_PhysHlth |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| object | object | object | object | object | object | object | object | object | object | object | object | object | f64 | f64 |

| No | 0.838 | 3.644 | 8.069 | 0.0 | 0.0 | 0.0 | 0.0 | 2.0 | 15.0 | 30.0 | 4.993 | 2.495 | 0.0 | 0.026764 |

| Yes | 0.136 | 7.95 | 11.297 | 0.0 | 0.0 | 0.0 | 1.0 | 15.0 | 30.0 | 30.0 | -0.338 | 1.151 | 0.155212 | 0.026533 |
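cross_quant_cat_summary is defined earlier in the notebook and is not shown here. The pandas sketch below gives a rough equivalent of its core columns: it groups the quantitative column by the response and aggregates. The function name grouped_summary and the exact choice of statistics are our assumptions, and the data are made up.

```python
import numpy as np
import pandas as pd

def grouped_summary(df, cat_col, quant_col):
    """Per-group descriptive statistics of quant_col, one row per level of cat_col."""
    g = df.groupby(cat_col)[quant_col]
    return pd.DataFrame({
        "prop": g.size() / len(df),   # share of all rows in each group (NaN rows included)
        "mean": g.mean(),             # pandas skips NaN by default
        "std": g.std(),
        "min": g.min(),
        "Q25": g.quantile(0.25),
        "median": g.median(),
        "Q75": g.quantile(0.75),
        "max": g.max(),
        # share of missing values within each group
        "prop_nan": df[quant_col].isna().groupby(df[cat_col]).mean(),
    })

df = pd.DataFrame({
    "Diabetes_binary": ["No", "No", "No", "Yes", "Yes"],
    "BMI": [22.0, 26.0, np.nan, 31.0, 35.0],
})
summary = grouped_summary(df, "Diabetes_binary", "BMI")
print(summary)
```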

Response vs Categorical Predictors#

Conditional contingency table#

for col in cat_predictors:
    try:
        display(contingency_table_2D(diabetes_df, cat1_name=response, cat2_name=col,
                                     conditional=True, axis=0))
    except Exception:
        print('-------------------------------')
        print(f'Computation failed for {col}')
        print('-------------------------------')

| (HighBP | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["Yes", "No"] | 82225 | 0.3766 |

| ["No", "No"] | 136109 | 0.6234 |

| ["Yes", "Yes"] | 26604 | 0.7527 |

| ["No", "Yes"] | 8742 | 0.2473 |

| (HighChol | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["Yes", "No"] | 83905 | 0.3843 |

| ["No", "No"] | 134429 | 0.6157 |

| ["Yes", "Yes"] | 23686 | 0.6701 |

| ["No", "Yes"] | 11660 | 0.3299 |

| (CholCheck | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["Yes", "No"] | 204181 | 0.9352 |

| ["No", "No"] | 9009 | 0.0413 |

| [null, "No"] | 5144 | 0.0236 |

| ["Yes", "Yes"] | 34326 | 0.9711 |

| [null, "Yes"] | 785 | 0.0222 |

| ["No", "Yes"] | 235 | 0.0066 |

| (Smoker | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["Yes", "No"] | 94106 | 0.431 |

| ["No", "No"] | 124228 | 0.569 |

| ["Yes", "Yes"] | 18317 | 0.5182 |

| ["No", "Yes"] | 17029 | 0.4818 |

| (Stroke | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["No", "No"] | 211310 | 0.9678 |

| ["Yes", "No"] | 7024 | 0.0322 |

| ["No", "Yes"] | 32078 | 0.9075 |

| ["Yes", "Yes"] | 3268 | 0.0925 |

| (HeartDiseaseorAttack | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["No", "No"] | 202319 | 0.9266 |

| ["Yes", "No"] | 16015 | 0.0734 |

| ["Yes", "Yes"] | 7878 | 0.2229 |

| ["No", "Yes"] | 27468 | 0.7771 |

| (PhysActivity | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["No", "No"] | 48701 | 0.2231 |

| ["Yes", "No"] | 169633 | 0.7769 |

| ["No", "Yes"] | 13059 | 0.3695 |

| ["Yes", "Yes"] | 22287 | 0.6305 |

| (Fruits | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["No", "No"] | 78129 | 0.3578 |

| ["Yes", "No"] | 140205 | 0.6422 |

| ["Yes", "Yes"] | 20693 | 0.5854 |

| ["No", "Yes"] | 14653 | 0.4146 |

| (Veggies | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["Yes", "No"] | 166980 | 0.7648 |

| ["No", "No"] | 36635 | 0.1678 |

| [null, "No"] | 14719 | 0.0674 |

| ["Yes", "Yes"] | 24923 | 0.7051 |

| ["No", "Yes"] | 8041 | 0.2275 |

| [null, "Yes"] | 2382 | 0.0674 |

| (HvyAlcoholConsump | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["No", "No"] | 204910 | 0.9385 |

| ["Yes", "No"] | 13424 | 0.0615 |

| ["No", "Yes"] | 34514 | 0.9765 |

| ["Yes", "Yes"] | 832 | 0.0235 |

| (AnyHealthcare | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["Yes", "No"] | 207339 | 0.9496 |

| ["No", "No"] | 10995 | 0.0504 |

| ["Yes", "Yes"] | 33924 | 0.9598 |

| ["No", "Yes"] | 1422 | 0.0402 |

| (NoDocbcCost | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["No", "No"] | 200454 | 0.9181 |

| ["Yes", "No"] | 17584 | 0.0805 |

| [null, "No"] | 296 | 0.0014 |

| ["No", "Yes"] | 31567 | 0.8931 |

| ["Yes", "Yes"] | 3736 | 0.1057 |

| [null, "Yes"] | 43 | 0.0012 |

| (GenHlth | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["5.0", "No"] | 7503 | 0.0344 |

| ["Fair", "No"] | 62189 | 0.2848 |

| ["Good", "No"] | 82703 | 0.3788 |

| ["Poor", "No"] | 21780 | 0.0998 |

| ["VeryGood", "No"] | 44159 | 0.2023 |

| ["5.0", "Yes"] | 4578 | 0.1295 |

| ["Fair", "Yes"] | 13457 | 0.3807 |

| ["Poor", "Yes"] | 9790 | 0.277 |

| ["Good", "Yes"] | 6381 | 0.1805 |

| ["VeryGood", "Yes"] | 1140 | 0.0323 |

| (DiffWalk | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["Yes", "No"] | 29554 | 0.1354 |

| ["No", "No"] | 188780 | 0.8646 |

| ["Yes", "Yes"] | 13121 | 0.3712 |

| ["No", "Yes"] | 22225 | 0.6288 |

| (Sex | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["Female", "No"] | 122166 | 0.5595 |

| [null, "No"] | 2489 | 0.0114 |

| ["Male", "No"] | 93679 | 0.4291 |

| ["Female", "Yes"] | 18214 | 0.5153 |

| ["Male", "Yes"] | 16745 | 0.4737 |

| [null, "Yes"] | 387 | 0.0109 |

| (Age | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["[60,65)", "No"] | 26340 | 0.1206 |

| ["[50,55)", "No"] | 22253 | 0.1019 |

| ["[70,75)", "No"] | 17624 | 0.0807 |

| ["[65,70)", "No"] | 24537 | 0.1124 |

| ["[55,60)", "No"] | 25478 | 0.1167 |

| ["[35,40)", "No"] | 12655 | 0.058 |

| ["[45,50)", "No"] | 17323 | 0.0793 |

| [null, "No"] | 9159 | 0.0419 |

| ["[75,80)", "No"] | 12064 | 0.0553 |

| ["[80,inf)", "No"] | 13524 | 0.0619 |

| ["[40,45)", "No"] | 14508 | 0.0664 |

| ["[18, 25)", "No"] | 5378 | 0.0246 |

| … | … | … |

| ["[70,75)", "Yes"] | 4925 | 0.1393 |

| ["[50,55)", "Yes"] | 2956 | 0.0836 |

| ["[65,70)", "Yes"] | 6287 | 0.1779 |

| ["[75,80)", "Yes"] | 3258 | 0.0922 |

| [null, "Yes"] | 1465 | 0.0414 |

| ["[55,60)", "Yes"] | 4098 | 0.1159 |

| ["[30,35)", "Yes"] | 295 | 0.0083 |

| ["[45,50)", "Yes"] | 1677 | 0.0474 |

| ["[35,40)", "Yes"] | 596 | 0.0169 |

| ["[40,45)", "Yes"] | 1004 | 0.0284 |

| ["[25,30)", "Yes"] | 139 | 0.0039 |

| ["[18, 25)", "Yes"] | 77 | 0.0022 |

| (Education | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["HighSchool", "No"] | 51684 | 0.2367 |

| ["CollegeGraduate", "No"] | 96925 | 0.4439 |

| ["SomeHighSchool", "No"] | 7182 | 0.0329 |

| ["SomeCollege", "No"] | 59556 | 0.2728 |

| ["Elementary", "No"] | 2860 | 0.0131 |

| ["Never", "No"] | 127 | 0.0006 |

| ["SomeCollege", "Yes"] | 10354 | 0.2929 |

| ["CollegeGraduate", "Yes"] | 10400 | 0.2942 |

| ["HighSchool", "Yes"] | 11066 | 0.3131 |

| ["Elementary", "Yes"] | 1183 | 0.0335 |

| ["SomeHighSchool", "Yes"] | 2296 | 0.065 |

| ["Never", "Yes"] | 47 | 0.0013 |

| (Income | Diabetes_binary) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i64 | f64 |

| ["[15k, 20k)", "No"] | 12426 | 0.0569 |

| ["[0, 10k)", "No"] | 7428 | 0.034 |

| ["[75k, inf)", "No"] | 83190 | 0.381 |

| ["[35k,50k)", "No"] | 31179 | 0.1428 |

| ["[20k,25k)", "No"] | 16081 | 0.0737 |

| ["[50k,75k)", "No"] | 37954 | 0.1738 |

| ["[10k,15k)", "No"] | 8697 | 0.0398 |

| ["[25k,35k)", "No"] | 21379 | 0.0979 |

| ["[0, 10k)", "Yes"] | 2383 | 0.0674 |

| ["[75k, inf)", "Yes"] | 7195 | 0.2036 |

| ["[35k,50k)", "Yes"] | 5291 | 0.1497 |

| ["[20k,25k)", "Yes"] | 4054 | 0.1147 |

| ["[25k,35k)", "Yes"] | 4504 | 0.1274 |

| ["[50k,75k)", "Yes"] | 5265 | 0.149 |

| ["[15k, 20k)", "Yes"] | 3568 | 0.1009 |

| ["[10k,15k)", "Yes"] | 3086 | 0.0873 |

for col in cat_predictors:
    try:
        display(contingency_table_2D(diabetes_df_non_cat_NaNs, cat1_name=response, cat2_name=col,
                                     conditional=True, axis=1))
    except Exception:
        print('-------------------------------')
        print(f'Computation failed for {col}')
        print('-------------------------------')

| (Diabetes_binary | HighBP) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "No"] | 117270 | 0.9395 |

| ["Yes", "No"] | 7548 | 0.0605 |

| ["No", "Yes"] | 70731 | 0.7547 |

| ["Yes", "Yes"] | 22985 | 0.2453 |

| (Diabetes_binary | HighChol) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "No"] | 115719 | 0.9199 |

| ["Yes", "No"] | 10077 | 0.0801 |

| ["No", "Yes"] | 72282 | 0.7794 |

| ["Yes", "Yes"] | 20456 | 0.2206 |

| (Diabetes_binary | CholCheck) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "No"] | 7940 | 0.9742 |

| ["Yes", "No"] | 210 | 0.0258 |

| ["No", "Yes"] | 180061 | 0.8559 |

| ["Yes", "Yes"] | 30323 | 0.1441 |

| (Diabetes_binary | Smoker) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "No"] | 107039 | 0.879 |

| ["Yes", "No"] | 14735 | 0.121 |

| ["No", "Yes"] | 80962 | 0.8367 |

| ["Yes", "Yes"] | 15798 | 0.1633 |

| (Diabetes_binary | Stroke) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "No"] | 181918 | 0.8678 |

| ["Yes", "No"] | 27714 | 0.1322 |

| ["No", "Yes"] | 6083 | 0.6833 |

| ["Yes", "Yes"] | 2819 | 0.3167 |

| (Diabetes_binary | HeartDiseaseorAttack) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "No"] | 174260 | 0.8802 |

| ["Yes", "No"] | 23711 | 0.1198 |

| ["Yes", "Yes"] | 6822 | 0.3318 |

| ["No", "Yes"] | 13741 | 0.6682 |

| (Diabetes_binary | PhysActivity) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "No"] | 42008 | 0.7881 |

| ["Yes", "No"] | 11294 | 0.2119 |

| ["No", "Yes"] | 145993 | 0.8836 |

| ["Yes", "Yes"] | 19239 | 0.1164 |

| (Diabetes_binary | Fruits) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "No"] | 67237 | 0.8419 |

| ["Yes", "No"] | 12629 | 0.1581 |

| ["No", "Yes"] | 120764 | 0.8709 |

| ["Yes", "Yes"] | 17904 | 0.1291 |

| (Diabetes_binary | Veggies) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "No"] | 33904 | 0.8205 |

| ["Yes", "No"] | 7416 | 0.1795 |

| ["No", "Yes"] | 154097 | 0.8696 |

| ["Yes", "Yes"] | 23117 | 0.1304 |

| (Diabetes_binary | HvyAlcoholConsump) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "No"] | 176441 | 0.8555 |

| ["Yes", "No"] | 29813 | 0.1445 |

| ["No", "Yes"] | 11560 | 0.9414 |

| ["Yes", "Yes"] | 720 | 0.0586 |

| (Diabetes_binary | AnyHealthcare) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "No"] | 9477 | 0.8853 |

| ["Yes", "No"] | 1228 | 0.1147 |

| ["No", "Yes"] | 178524 | 0.859 |

| ["Yes", "Yes"] | 29305 | 0.141 |

| (Diabetes_binary | NoDocbcCost) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "No"] | 172849 | 0.8636 |

| ["Yes", "No"] | 27297 | 0.1364 |

| ["No", "Yes"] | 15152 | 0.824 |

| ["Yes", "Yes"] | 3236 | 0.176 |

| (Diabetes_binary | GenHlth) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "5.0"] | 6447 | 0.6213 |

| ["Yes", "5.0"] | 3930 | 0.3787 |

| ["No", "Fair"] | 53548 | 0.822 |

| ["Yes", "Fair"] | 11596 | 0.178 |

| ["No", "Good"] | 71286 | 0.9281 |

| ["Yes", "Good"] | 5519 | 0.0719 |

| ["Yes", "Poor"] | 8503 | 0.3128 |

| ["No", "Poor"] | 18680 | 0.6872 |

| ["Yes", "VeryGood"] | 985 | 0.0252 |

| ["No", "VeryGood"] | 38040 | 0.9748 |

| (Diabetes_binary | DiffWalk) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "No"] | 162551 | 0.8943 |

| ["Yes", "No"] | 19207 | 0.1057 |

| ["No", "Yes"] | 25450 | 0.692 |

| ["Yes", "Yes"] | 11326 | 0.308 |

| (Diabetes_binary | Sex) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "Female"] | 106426 | 0.8696 |

| ["Yes", "Female"] | 15962 | 0.1304 |

| ["No", "Male"] | 81575 | 0.8484 |

| ["Yes", "Male"] | 14571 | 0.1516 |

| (Diabetes_binary | Age) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "[18, 25)"] | 4851 | 0.986 |

| ["Yes", "[18, 25)"] | 69 | 0.014 |

| ["No", "[25,30)"] | 6377 | 0.98 |

| ["Yes", "[25,30)"] | 130 | 0.02 |

| ["No", "[30,35)"] | 9338 | 0.9724 |

| ["Yes", "[30,35)"] | 265 | 0.0276 |

| ["No", "[35,40)"] | 11380 | 0.9549 |

| ["Yes", "[35,40)"] | 537 | 0.0451 |

| ["No", "[40,45)"] | 13010 | 0.935 |

| ["Yes", "[40,45)"] | 904 | 0.065 |

| ["No", "[45,50)"] | 15586 | 0.9112 |

| ["Yes", "[45,50)"] | 1518 | 0.0888 |

| … | … | … |

| ["No", "[55,60)"] | 22830 | 0.8602 |

| ["Yes", "[55,60)"] | 3711 | 0.1398 |

| ["No", "[60,65)"] | 23707 | 0.8282 |

| ["Yes", "[60,65)"] | 4917 | 0.1718 |

| ["No", "[65,70)"] | 22036 | 0.7952 |

| ["Yes", "[65,70)"] | 5675 | 0.2048 |

| ["No", "[70,75)"] | 15819 | 0.7815 |

| ["Yes", "[70,75)"] | 4422 | 0.2185 |

| ["No", "[75,80)"] | 10864 | 0.7866 |

| ["Yes", "[75,80)"] | 2947 | 0.2134 |

| ["Yes", "[80,inf)"] | 2794 | 0.1866 |

| ["No", "[80,inf)"] | 12181 | 0.8134 |

| (Diabetes_binary | Education) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "CollegeGraduate"] | 83433 | 0.9025 |

| ["Yes", "CollegeGraduate"] | 9009 | 0.0975 |

| ["Yes", "Elementary"] | 1020 | 0.2952 |

| ["No", "Elementary"] | 2435 | 0.7048 |

| ["No", "HighSchool"] | 44446 | 0.823 |

| ["Yes", "HighSchool"] | 9557 | 0.177 |

| ["Yes", "Never"] | 37 | 0.2534 |

| ["No", "Never"] | 109 | 0.7466 |

| ["No", "SomeCollege"] | 51308 | 0.8518 |

| ["Yes", "SomeCollege"] | 8930 | 0.1482 |

| ["No", "SomeHighSchool"] | 6270 | 0.76 |

| ["Yes", "SomeHighSchool"] | 1980 | 0.24 |

| (Diabetes_binary | Income) : unique values | abs_freq | rel_freq |

|---|---|---|

| list[str] | i32 | f64 |

| ["No", "[0, 10k)"] | 6431 | 0.7573 |

| ["Yes", "[0, 10k)"] | 2061 | 0.2427 |

| ["No", "[10k,15k)"] | 7479 | 0.7368 |

| ["Yes", "[10k,15k)"] | 2672 | 0.2632 |

| ["No", "[15k, 20k)"] | 10695 | 0.7764 |

| ["Yes", "[15k, 20k)"] | 3080 | 0.2236 |

| ["No", "[20k,25k)"] | 13792 | 0.796 |

| ["Yes", "[20k,25k)"] | 3534 | 0.204 |

| ["No", "[25k,35k)"] | 18457 | 0.8256 |

| ["Yes", "[25k,35k)"] | 3898 | 0.1744 |

| ["No", "[35k,50k)"] | 26838 | 0.8551 |

| ["Yes", "[35k,50k)"] | 4546 | 0.1449 |

| ["No", "[50k,75k)"] | 32756 | 0.8788 |

| ["Yes", "[50k,75k)"] | 4519 | 0.1212 |

| ["No", "[75k, inf)"] | 71553 | 0.92 |

| ["Yes", "[75k, inf)"] | 6223 | 0.08 |
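The two loops above condition in opposite directions. With axis=0, each table gives the distribution of a predictor within each response class, P(predictor | Diabetes_binary). With axis=1, it gives P(Diabetes_binary | predictor). contingency_table_2D is not shown in this section. Assuming it computes these conditional proportions, as the table titles suggest, pandas.crosstab produces the same two kinds of table through its normalize argument. A minimal sketch on made-up data:

```python
import pandas as pd

df = pd.DataFrame({
    "Diabetes_binary": ["No", "No", "No", "Yes", "Yes", "No"],
    "HighBP":          ["No", "Yes", "No", "Yes", "Yes", "No"],
})

# P(HighBP | Diabetes_binary): each column (response class) sums to 1
p_pred_given_resp = pd.crosstab(df["HighBP"], df["Diabetes_binary"], normalize="columns")

# P(Diabetes_binary | HighBP): each row (predictor level) sums to 1
p_resp_given_pred = pd.crosstab(df["HighBP"], df["Diabetes_binary"], normalize="index")

print(p_pred_given_resp)
print(p_resp_given_pred)
```

The second form is usually the more directly useful one for risk: it reads as "among people with high blood pressure, what fraction are diabetic".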

Visualization of conditional contingency tables#

In this section, we will graphically represent the information from the previous tables to facilitate the extraction of insights.

def response_conditioned_barplot(df, cat_predictor, response, n_rows, figsize, title_size, subtitles_size, title_height,
                                 xlabel_size=10, xticks_size=9, hspace=1, wspace=0.5, palette='tab10', ylabel_size=11):
    # Proportion of each response value within each category of cat_predictor
    cond_prop_response = {cat_predictor: {}}
    for cat in df[cat_predictor].unique().sort():  # sorted so the subplot order is reproducible
        Y_cond = df.filter(pl.col(cat_predictor) == cat)[response].to_numpy()
        unique_values, counts = np.unique(Y_cond, return_counts=True)
        prop = np.round(counts / len(Y_cond), 3)
        cond_prop_response[cat_predictor][cat] = dict(zip(unique_values, prop))

    n_categories = len(cond_prop_response[cat_predictor])
    n_cols = int(np.ceil(n_categories / n_rows))
    # squeeze=False always returns a 2D array of axes, so flatten() also works for a 1x1 grid
    fig, axs = plt.subplots(n_rows, n_cols, figsize=figsize, squeeze=False)
    axes = axs.flatten()
    colors = sns.color_palette(palette, 20)

    # One bar plot of P(response | category) per category
    for i, cat in enumerate(cond_prop_response[cat_predictor]):
        sns.barplot(x=list(cond_prop_response[cat_predictor][cat].keys()),
                    y=list(cond_prop_response[cat_predictor][cat].values()),
                    color=colors[i], alpha=1, width=0.4, ax=axes[i])
        axes[i].set_title(cat, fontsize=subtitles_size)

    for ax in axes:
        ax.set_ylabel('')
        ax.set_xlabel(response, size=xlabel_size)
        ax.tick_params(axis='x', rotation=0, labelsize=xticks_size)
        ax.set_yticks(np.arange(0, 1.2, 0.2))
    axes[0].set_ylabel('Proportion', size=ylabel_size)

    plt.suptitle(f'{response} | {cat_predictor}', size=title_size, weight='bold', y=title_height)
    plt.subplots_adjust(hspace=hspace, wspace=wspace)
    # Remove the unused axes at the end of the grid
    for j in range(n_categories, n_rows * n_cols):
        fig.delaxes(axes[j])
    plt.show()

n_unique = {}
for col in cat_predictors:
    n_unique[col] = len(diabetes_df[col].unique())

for col in cat_predictors:
    # Choose the subplot grid according to the number of categories
    if n_unique[col] <= 3:
        n_rows = 1
        figsize = (8, 3)
        title_height = 1.05
    elif n_unique[col] <= 8:
        n_rows = 2
        figsize = (8, 4.5)
        title_height = 1.02
    else:
        n_rows = 3
        figsize = (10, 7)
        title_height = 0.99
    response_conditioned_barplot(df=diabetes_df_non_cat_NaNs, cat_predictor=col, response=response, n_rows=n_rows,
                                 figsize=figsize, title_size=12, subtitles_size=11, title_height=title_height,
                                 xlabel_size=10, xticks_size=9, hspace=0.7, wspace=0.3, palette='tab10')

These plots show how the response variable relates to predictors that, in principle, should be related to it. Understanding these relationships should help us in the later parts of the project.

Looking at the distribution of the response within each category, we can see clear differences for several predictors. However, these relationships should not be taken at face value: some differences may be due to chance, and an association does not by itself imply causation.

Age is a well-established risk factor for diabetes, and the plots agree: the proportion of diabetics rises from about 1.4% in the [18, 25) group to around 20-22% in the groups aged 65 to 80. Related variables such as general health follow a similar pattern. Health tends to worsen with age, and poorer self-reported health goes together with a higher proportion of diabetics (31% for "Poor" versus 2.5% for "VeryGood").

Income also shows a clear gradient: the proportion of diabetics falls steadily from about 24-26% in the two lowest brackets to 8% in the highest, [75k, inf). One possible explanation is diet, since people with lower incomes may rely more on cheaper processed foods with a higher sugar content, but these data cannot confirm that. Whether the difference is statistically significant should be checked with a formal test.
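A chi-squared test of independence is one such test. The sketch below applies scipy.stats.chi2_contingency to the Diabetes_binary by Income counts copied from the (Diabetes_binary | Income) table. SciPy is not among the notebook's imports, so it is an added dependency here. With more than 200,000 respondents, even small differences come out as highly significant, so the p-value should be read together with the size of the difference in proportions.

```python
import numpy as np
from scipy.stats import chi2_contingency

income_levels = ["[0, 10k)", "[10k,15k)", "[15k, 20k)", "[20k,25k)",
                 "[25k,35k)", "[35k,50k)", "[50k,75k)", "[75k, inf)"]
# Counts from the (Diabetes_binary | Income) table: row 0 = "No", row 1 = "Yes"
observed = np.array([
    [6431, 7479, 10695, 13792, 18457, 26838, 32756, 71553],
    [2061, 2672,  3080,  3534,  3898,  4546,  4519,  6223],
])

chi2, p_value, dof, expected = chi2_contingency(observed)
print(f"chi2 = {chi2:.1f}, dof = {dof}, p = {p_value:.3g}")

# Proportion of diabetics within each income bracket (effect size to report alongside p)
prop_yes = observed[1] / observed.sum(axis=0)
for level, p in zip(income_levels, prop_yes):
    print(f"{level:>12}: {p:.3f}")
```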

Many more insights can be drawn from these relationships, but, as noted above, some of them may be coincidental. They are still worth keeping in mind for closer study.

More key insights from the plots include:

- High blood pressure ("HighBP") and high cholesterol ("HighChol") are more common among diabetics than among non-diabetics.
- A substantial majority of both diabetic and non-diabetic individuals have had cholesterol checks ("CholCheck"), but the proportion is slightly higher among diabetics.
- Smoking ("Smoker") is more prevalent among diabetics than among non-diabetics.
- Stroke ("Stroke") and heart disease or attack ("HeartDiseaseorAttack") are notably more frequent among diabetics.
- Diabetics are less likely to engage in physical activity ("PhysActivity") than non-diabetics.
- Diabetics report lower consumption of fruits ("Fruits") and vegetables ("Veggies"), and less heavy alcohol consumption ("HvyAlcoholConsump").
- Most individuals, regardless of diabetes status, have some form of healthcare coverage ("AnyHealthcare"), but the proportion is marginally higher among diabetics.
- Diabetics are more likely to report cost as a barrier to seeing a doctor ("NoDocbcCost").
- General health ("GenHlth") ratings are poorer among diabetics, with higher frequencies of Fair and Poor ratings.
- Difficulty walking ("DiffWalk") is more frequently reported by diabetics.
- Gender ("Sex") shows a balanced distribution across diabetes status, with a slight female preponderance.
- Diabetes prevalence increases with advancing age ("Age").
- Education and income show varied distributions, with a tendency towards lower education and income levels among diabetics.

Intro to Machine Learning with Sklearn#

Defining the response and predictors#

quant_columns = [col for col in diabetes_df.columns if diabetes_df[col].dtype == pl.Float64]

cat_columns = [col for col in diabetes_df.columns if diabetes_df[col].dtype == pl.Utf8]

response = 'Diabetes_binary'

predictors = [col for col in diabetes_df.columns if col != response]

quant_predictors = [col for col in predictors if col in quant_columns]

cat_predictors = [col for col in predictors if col in cat_columns]

Y = diabetes_df[response].to_pandas()

X = diabetes_df[predictors].to_pandas()

Y

0 No

1 No

2 No

3 No

4 No

...

253675 No

253676 Yes

253677 No

253678 No

253679 Yes

Name: Diabetes_binary, Length: 253680, dtype: object

X

| | HighBP | HighChol | CholCheck | BMI | Smoker | Stroke | HeartDiseaseorAttack | PhysActivity | Fruits | Veggies | ... | AnyHealthcare | NoDocbcCost | GenHlth | MentHlth | PhysHlth | DiffWalk | Sex | Age | Education | Income |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | Yes | Yes | Yes | 40.0 | Yes | No | No | No | No | Yes | ... | Yes | No | 5.0 | 18.0 | 15.0 | Yes | Female | [60,65) | HighSchool | [15k, 20k) |
| 1 | No | No | No | 25.0 | Yes | No | No | Yes | No | No | ... | No | Yes | Fair | 0.0 | 0.0 | No | Female | [50,55) | CollegeGraduate | [0, 10k) |
| 2 | Yes | Yes | Yes | 28.0 | No | No | No | No | Yes | No | ... | Yes | Yes | 5.0 | 30.0 | 30.0 | Yes | Female | [60,65) | HighSchool | [75k, inf) |
| 3 | Yes | No | Yes | 27.0 | No | No | No | Yes | Yes | Yes | ... | Yes | No | Good | 0.0 | 0.0 | No | Female | [70,75) | SomeHighSchool | [35k,50k) |
| 4 | Yes | Yes | Yes | 24.0 | No | No | No | Yes | Yes | Yes | ... | Yes | No | Good | 3.0 | 0.0 | No | Female | [70,75) | SomeCollege | [20k,25k) |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 253675 | Yes | Yes | Yes | 45.0 | No | No | No | No | Yes | Yes | ... | Yes | No | Fair | 0.0 | 5.0 | No | Male | [40,45) | CollegeGraduate | [50k,75k) |
| 253676 | Yes | Yes | Yes | 18.0 | No | No | No | No | No | No | ... | Yes | No | Poor | 0.0 | 0.0 | Yes | Female | [70,75) | Elementary | [20k,25k) |
| 253677 | No | No | Yes | 28.0 | No | No | No | Yes | Yes | None | ... | Yes | No | VeryGood | 0.0 | 0.0 | No | Female | [25,30) | SomeCollege | [10k,15k) |
| 253678 | Yes | No | Yes | 23.0 | No | No | No | No | Yes | Yes | ... | Yes | No | Fair | 0.0 | 0.0 | No | Male | [50,55) | SomeCollege | [0, 10k) |
| 253679 | Yes | Yes | Yes | 25.0 | No | No | Yes | Yes | Yes | No | ... | Yes | No | Good | 0.0 | NaN | No | Female | [60,65) | CollegeGraduate | [10k,15k) |

253680 rows × 21 columns

To use the predictors and the response with most sklearn estimators, they must satisfy two conditions:

- They must be numerical (int or float).
- They must not contain missing values (NaN).

Otherwise, an error is raised when fitting. A few estimators, such as HistGradientBoostingClassifier, are exceptions and handle missing values natively.

# Encoding the categorical variables using an ordinal encoder

enc = OrdinalEncoder()

Y = enc.fit_transform(Y.to_numpy().reshape(-1, 1)).flatten()

X_cat = enc.fit_transform(X[cat_predictors])

X_quant = X[quant_predictors].to_numpy()

X = np.column_stack((X_quant, X_cat))

X

array([[40., 18., 15., ..., 8., 2., 2.],

[25., 0., 0., ..., 6., 0., 0.],

[28., 30., 30., ..., 8., 2., 7.],

...,

[28., 0., 0., ..., 1., 4., 1.],

[23., 0., 0., ..., 6., 4., 0.],

[25., 0., nan, ..., 8., 0., 1.]])
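One caveat with `OrdinalEncoder` as used above: with the default `categories='auto'`, the categories of each column are sorted, so the codes follow alphabetical order rather than any natural order. For an ordered variable such as `GenHlth`, this places "Fair" between "Excellent" and "Good". The sketch below contrasts the default with an explicit `categories` list; the toy values and the worst-to-best ordering are assumptions for illustration.

```python
import pandas as pd
from sklearn.preprocessing import OrdinalEncoder

# Toy sample of a GenHlth-like column (illustrative values, not the real data)
gen_hlth = pd.DataFrame({"GenHlth": ["Good", "Poor", "Excellent", "Fair", "VeryGood"]})

# Default: categories are learned from the data and sorted alphabetically
auto_enc = OrdinalEncoder()
auto_codes = auto_enc.fit_transform(gen_hlth).ravel()
print(auto_enc.categories_[0])  # ['Excellent' 'Fair' 'Good' 'Poor' 'VeryGood']
print(auto_codes)               # [2. 3. 0. 1. 4.]

# Explicit order, from worst to best self-reported health (assumed ordering)
order = [["Poor", "Fair", "Good", "VeryGood", "Excellent"]]
ordered_enc = OrdinalEncoder(categories=order)
ordered_codes = ordered_enc.fit_transform(gen_hlth).ravel()
print(ordered_codes)            # [2. 0. 4. 1. 3.]
```

For tree-based models the ordering matters less, but for distance-based models like KNN or linear models an alphabetical code order can impose a meaningless numeric structure.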

# Filling the missing values using a simple imputer (column means)

from sklearn.impute import SimpleImputer

simple_imputer = SimpleImputer(missing_values=np.nan, strategy='mean')

X = simple_imputer.fit_transform(X)

np.sum(np.isnan(X))

0

This is a naive approach, since the categorical variables are also imputed with the mean, which can produce codes that do not correspond to any category (for example 1.37).

The more correct approach is to impute the quantitative and categorical variables separately, applying one strategy to the former (e.g. the mean) and another to the latter (e.g. the most frequent value). We will see this approach later.
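A minimal sketch of that separate treatment, assuming the column layout produced by `np.column_stack` above (quantitative columns first, encoded categorical columns after). It uses `ColumnTransformer` with two `SimpleImputer` instances: the mean for quantitative columns and the most frequent value for categorical ones. The toy matrix and `n_quant` are hypothetical stand-ins for `X` and `len(quant_predictors)`.

```python
import numpy as np
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer

# Toy matrix laid out like X above: quantitative columns first, then
# ordinal-encoded categorical columns (values are illustrative only).
X_toy = np.array([
    [40.0, 18.0, 1.0, 2.0],
    [25.0, np.nan, 0.0, np.nan],
    [28.0, 30.0, np.nan, 2.0],
    [np.nan, 0.0, 1.0, 0.0],
])
n_quant = 2  # hypothetical: number of quantitative columns at the front

imputer = ColumnTransformer([
    # Mean for continuous measurements
    ("quant", SimpleImputer(strategy="mean"), list(range(n_quant))),
    # Mode keeps imputed categorical codes within the set of valid codes
    ("cat", SimpleImputer(strategy="most_frequent"), list(range(n_quant, X_toy.shape[1]))),
])
X_imputed = imputer.fit_transform(X_toy)
print(X_imputed)
```

Because the transformers are listed in column order and cover every column, the output keeps the original layout. In practice the imputer would be fitted on the training split only (for example inside a `Pipeline`) so that test data does not leak into the imputed values.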

Defining outer evaluation: train-test split#

# Defining the outer-evaluation: train-test split.

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, train_size=0.75, random_state=123, stratify=Y)

X_train.shape

(190260, 21)

X_test.shape

(63420, 21)
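Because `stratify=Y` is passed, the split preserves the class proportions of the response in both the training and the test set, which matters when one class is much rarer than the other. A quick check on toy labels (160 positives out of 1,000, an assumed proportion chosen so that the counts divide evenly):

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy data: 1,000 samples, 160 positives (16%), assumed for illustration
Y_toy = np.array([1] * 160 + [0] * 840)
X_toy = np.random.default_rng(0).normal(size=(1000, 3))

X_tr, X_te, Y_tr, Y_te = train_test_split(
    X_toy, Y_toy, train_size=0.75, random_state=123, stratify=Y_toy
)

# Positive rate in each subset: with stratification both match the overall 16%
print(Y_tr.mean(), Y_te.mean())
print(Y_tr.sum(), Y_te.sum())  # positives in train and test
```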

Defining inner evaluation: K-Fold Cross Validation#

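# Defining the inner-evaluation: stratified k-fold cross-validation (3 folds)

inner = StratifiedKFold(n_splits=3, shuffle=True, random_state=123)

# Non-stratified alternative (requires: from sklearn.model_selection import KFold)
# inner = KFold(n_splits=3, shuffle=True, random_state=123)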

Defining some models#

knn = KNeighborsClassifier(n_neighbors=5, metric='euclidean')

tree = DecisionTreeClassifier(max_depth=10, min_samples_split=2, min_samples_leaf=3, random_state=123)

RF = RandomForestClassifier(n_estimators=40, max_features=0.8, max_depth=10, min_samples_split=2, min_samples_leaf=3, random_state=123)

models = [knn, tree, RF]

model_names = ['knn', 'tree', 'RF']

Training a model (fit method)

RF.fit(X=X_train, y=Y_train)

RandomForestClassifier(max_depth=10, max_features=0.8, min_samples_leaf=3,

n_estimators=40, random_state=123)

Predicting using a model (predict method)

# Training predictions

Y_train_hat = RF.predict(X=X_train)

# Testing predictions

Y_test_hat = RF.predict(X=X_test)

Computing a score

# Training score

balanced_accuracy_score(y_pred=Y_train_hat, y_true=Y_train)

0.5716832507119044

# Testing score

balanced_accuracy_score(y_pred=Y_test_hat, y_true=Y_test)

0.5574103382707574
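Balanced accuracy is the mean of the recall obtained on each class, so a model that almost always predicts the majority class scores close to 0.5 even when its plain accuracy is high. This is one plausible reading of why the scores above sit only slightly above 0.5. The toy labels below are made up to show the computation.

```python
import numpy as np
from sklearn.metrics import accuracy_score, balanced_accuracy_score, recall_score

# Made-up labels: 8 negatives, 2 positives; the model misses one positive
y_true = np.array([0, 0, 0, 0, 0, 0, 0, 0, 1, 1])
y_pred = np.array([0, 0, 0, 0, 0, 0, 0, 0, 1, 0])

# Recall of each class: specificity for class 0, sensitivity for class 1
per_class_recall = recall_score(y_true, y_pred, average=None)
manual_bal_acc = per_class_recall.mean()

print("accuracy:", accuracy_score(y_true, y_pred))                     # 0.9
print("balanced accuracy (manual):", manual_bal_acc)                   # 0.75
print("balanced accuracy (sklearn):", balanced_accuracy_score(y_true, y_pred))

# A classifier that always predicts the majority class ("No")
always_no = np.zeros_like(y_true)
print("always 'No':", accuracy_score(y_true, always_no),
      balanced_accuracy_score(y_true, always_no))                      # 0.8 0.5
```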

Applying inner evaluation#

Without HPO#

from sklearn.model_selection import cross_val_score

scores_arr = cross_val_score(X=X_train, y=Y_train, estimator=RF, scoring='balanced_accuracy', cv=inner)

scores_arr

array([0.55845631, 0.5590863 , 0.55492997])

np.mean(scores_arr)

0.5574908591434647
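`cross_val_score` hides the inner loop. The sketch below spells it out: for each of the three stratified folds, a fresh copy of the model (`sklearn.base.clone`) is fitted on the other two folds and scored with balanced accuracy on the held-out fold. It runs on synthetic data from `make_classification`, an assumed stand-in for `X_train` and `Y_train`, and checks that the manual loop reproduces `cross_val_score`.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.metrics import balanced_accuracy_score
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic, imbalanced stand-in for (X_train, Y_train)
X_toy, Y_toy = make_classification(n_samples=600, n_features=8, weights=[0.85], random_state=0)
inner_toy = StratifiedKFold(n_splits=3, shuffle=True, random_state=123)
model = DecisionTreeClassifier(max_depth=4, random_state=123)

manual_scores = []
for train_idx, val_idx in inner_toy.split(X_toy, Y_toy):
    fold_model = clone(model)  # fresh, unfitted copy for every fold
    fold_model.fit(X_toy[train_idx], Y_toy[train_idx])
    Y_val_hat = fold_model.predict(X_toy[val_idx])
    manual_scores.append(balanced_accuracy_score(Y_toy[val_idx], Y_val_hat))

auto_scores = cross_val_score(model, X_toy, Y_toy, scoring="balanced_accuracy", cv=inner_toy)
print(np.round(manual_scores, 4))
print(np.round(auto_scores, 4))
```

Since `inner_toy` uses a fixed `random_state`, every call to `split` yields the same folds, which is why both approaches give identical scores.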

scores = {}

for model, name in zip(models, model_names):
    print(model)
    scores_arr = cross_val_score(X=X_train, y=Y_train, estimator=model, scoring='balanced_accuracy', cv=inner)
    print(scores_arr)
    scores[name] = np.mean(scores_arr)

KNeighborsClassifier(metric='euclidean')

[0.56353619 0.5624154 0.55945893]

DecisionTreeClassifier(max_depth=10, min_samples_leaf=3, random_state=123)

[0.56811021 0.5637455 0.55970761]

RandomForestClassifier(max_depth=10, max_features=0.8, min_samples_leaf=3,

n_estimators=40, random_state=123)

[0.55845631 0.5590863 0.55492997]

scores

{'knn': 0.5618035076772085,

'tree': 0.5638544376891831,

'RF': 0.5574908591434647}

With HPO#

Grid Search#

param_grid = {'n_estimators': [25, 40],

'max_features': [0.5, 0.8],

'max_depth': [2, 5],

'min_samples_split': [2],

'min_samples_leaf': [3]}

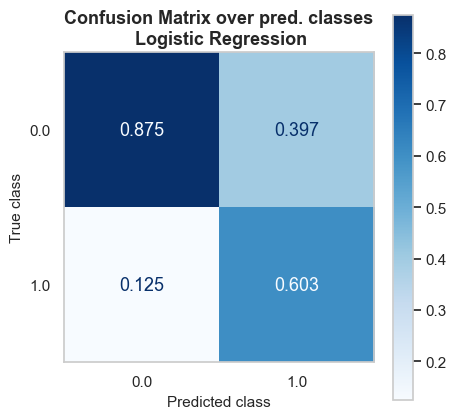

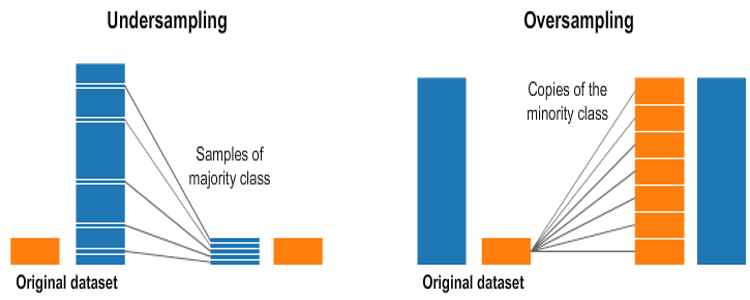

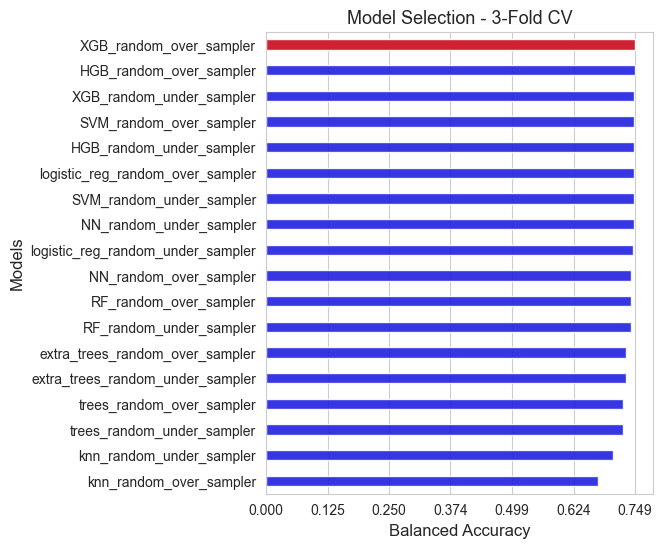

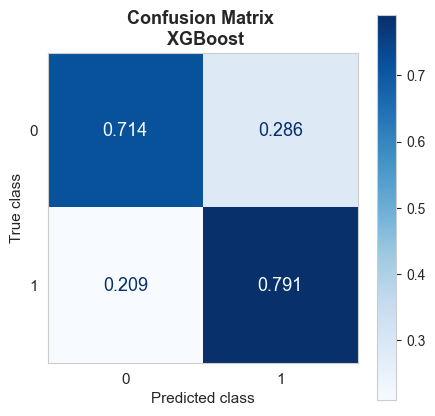

from sklearn.model_selection import GridSearchCV

grid_search = GridSearchCV(estimator=RF, param_grid=param_grid, cv=inner, scoring='balanced_accuracy', verbose=True)
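A grid search fits the estimator once per combination of the listed values and per inner fold. The sketch below counts the combinations in this `param_grid` with `ParameterGrid`, which `GridSearchCV` uses to enumerate its candidates: 2 × 2 × 2 × 1 × 1 = 8 candidates, which with the 3 folds of `inner` means 24 fits, plus one final refit on the whole training set because `refit=True` by default.

```python
from sklearn.model_selection import ParameterGrid

# Same grid as above
param_grid = {'n_estimators': [25, 40],
              'max_features': [0.5, 0.8],
              'max_depth': [2, 5],
              'min_samples_split': [2],
              'min_samples_leaf': [3]}

candidates = list(ParameterGrid(param_grid))
n_folds = 3  # matches inner = StratifiedKFold(n_splits=3, ...)

print(len(candidates))            # 8
print(len(candidates) * n_folds)  # 24 fits during the search
for params in candidates[:2]:
    print(params)
```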